Category Archives: Virality

Distribution Economics

Posted by Hindol Datta

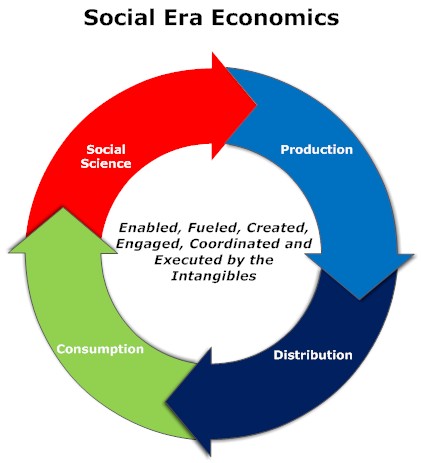

Distribution is a method to get products and services to the maximum number of customers efficiently.

Complexity science is the study of complex systems and of problems that are multi-dimensional, dynamic and unpredictable. Such a system constitutes a set of interconnected relationships that do not always abide by the laws of cause and effect, but rather follow the modality of non-linearity. Thomas Kuhn, in his pivotal work The Structure of Scientific Revolutions, posits that anomalies that arise in the scientific method rise to the level where they can no longer be put on hold or left to simmer on a back-burner: rather, those anomalies become the front line for new methods and inquiries such that a new paradigm necessarily must emerge to supplant the old conversations. It is this that lays the foundation of scientific revolution: an emergence that occurs in an ocean of seeming paradoxes and competing theories. Contrary to a simple scientific method that seeks to surface regularities in natural phenomena, complexity science studies the effects that rules have on agents. Rules do not drive systems toward a predictable outcome: rather, they set into motion a high density of interactions among agents such that the system coalesces around a purpose, that purpose necessarily being survival in the context of its immediate environment. In addition, the learnings gathered in arriving at the outcome are then replicated over periods to ensure that the system adapts to changes in the external environment. In theory, the generative rules lead to emergent behavior that displays patterns of parallelism to earlier known structures.

For any system to survive and flourish, the distribution of information, noise and signals inside and outside of a CPS or CAS is critical. We have discussed at length that the system comprises actors and agents that work cohesively together to fulfill a special purpose. Specialization and scale matter! How is a system enabled to fulfill its purpose and arrive at a scale that ensures long-term sustenance? Hence the discussion on distribution and scale, which is a salient factor in the emergence of complex systems and provides the inherent moat of “defensibility” against internal and external agents working against it.

Distribution, in this context, refers to the quality and speed of information processing in the system. It is either created by a set of rules that govern the tie-ups between the constituent elements in the system or it emerges based on a spontaneous evolution of communication protocols that are established in response to internal and external stimuli. It takes into account the available resources in the system or it sets up the demands on resource requirements. Distribution capabilities have to be effective and depending upon the dynamics of external systems, these capabilities might have to be modified effectively. Some distribution systems have to be optimized or organized around efficiency: namely, the ability of the system to distribute information efficiently. On the other hand, some environments might call for less efficiency as the key parameter, but rather focus on establishing a scale – an escape velocity in size and interaction such that the system can dominate the influence of external environments. The choice between efficiency and size is framed by the long-term purpose of the system while also accounting for the exigencies of ebbs and flows of external agents that might threaten the system’s existence.

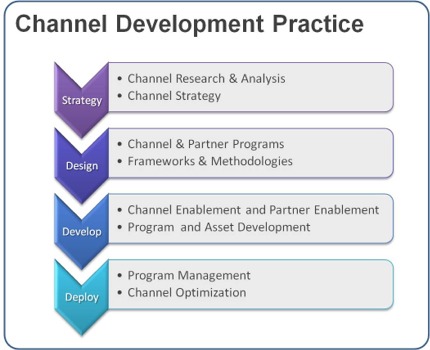

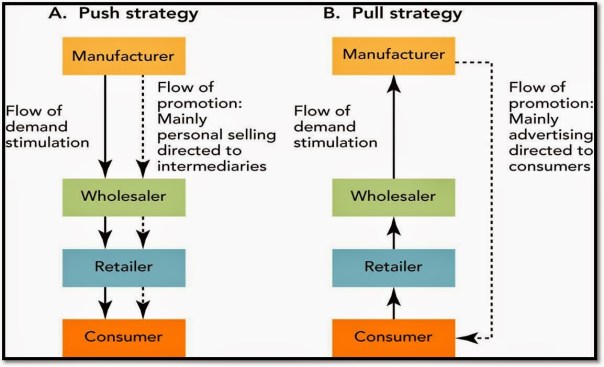

Since all systems are subject to the laws of entropy and the impact of unintended consequences, strategies have to be orchestrated accordingly. While it is always naïve to assume exactitude in the ultimate impact of rules and behavior, one would surmise that such systems have to be built around the fault lines of multiple roles for agents or groups of agents to ensure that the system is being nudged, more than less, toward the desired outcome. Hence, distribution strategy is the aggregate impact of several types of channels of information that are actively working toward a common goal. The idea is to establish multiple channels that invoke different strategies while not cannibalizing or sabotaging an existing set of channels. These mutually exclusive channels have inherent properties that are distinguished by the capacity and length of the channels, the corresponding resources that the channels use and the sheer ability to chaperone the system toward the overall purpose.

The complexity of the purpose and the external environment determines the strategies deployed and whether scale or efficiency is the key barometer for success. If a complex system must survive and hopefully replicate from strength to greater strength over time, size becomes more important than efficiency. Size makes up for the increased entropy, which is the default tax on the system, and it also increases the possibility that the system reaches escape velocity. To that end, managing for scale by compromising efficiency is a perfectly acceptable means, since one is looking at the system with a long-term lens with built-in regeneration capabilities. However, not all systems fall in this category, because some environments are so dynamic that planning toward long-term stability is not practical, and thus one has to quickly optimize for increased efficiency. It is thus obvious that scale versus efficiency involves risky bets around how the external environment will evolve. We have looked at how systems interact with external environments: yet, it is just as important to understand how the actors work internally in a system that is pressed toward scale rather than efficiency, or vice versa. If the objective is to work toward efficiency, then capabilities can be ephemeral: one builds out agents and actors with capabilities that are mission-specific. On the contrary, scale-driven systems demand capabilities that involve increased multi-tasking abilities, the ability to develop and learn from feedback loops, and the ability to prime the constraints with additional resources. Scaling demands acceleration and speed: if a complex system can be devised to distribute information and learning at an accelerating pace, there is a greater likelihood that this system will dominate the environment.

Scaling systems can be approached by adding more agents with varying capabilities. However, an increased number of participants exponentially increases the permutations and combinations of channels, and that can make the system sluggish. Thus, in establishing the purpose and the subsequent design of the system, it is far more important to establish the rules of engagement. Further, some rules might rest on a centralized authority that directionally provides the goal, while other rules might be framed in a manner that encourages a pure decentralization of authority such that participants act quickly in groups and clusters to enable execution toward a common purpose.

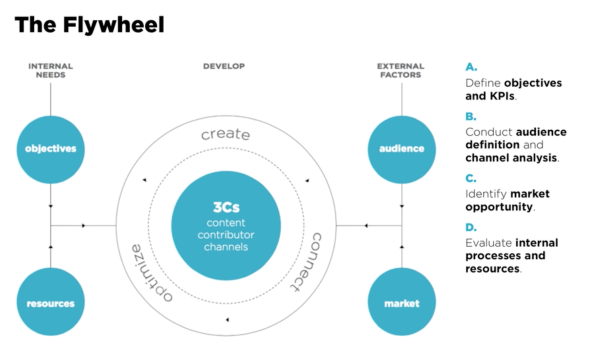

In business we are surrounded by uncertainty and opportunities. It is how we calibrate around this that ultimately reflects success. The ideal framework at work would be as follows:

- What are the opportunities and what are the corresponding uncertainties associated with the opportunities? An honest evaluation is in order since this is what sets the tone for the strategic framework and direction of the organization.

- Should we be opportunistic and establish rules that allow the system to gear toward quick wins? This would be more inclined toward efficiencies. Or should we pursue dominance by evaluating our internal capability and the probability of winning and displacing other systems that are repositioning in advance of or in response to our efforts? In that case, speed and scale become the dominant metrics, and the resources, the capabilities and the set of governing rules have to be aligned accordingly.

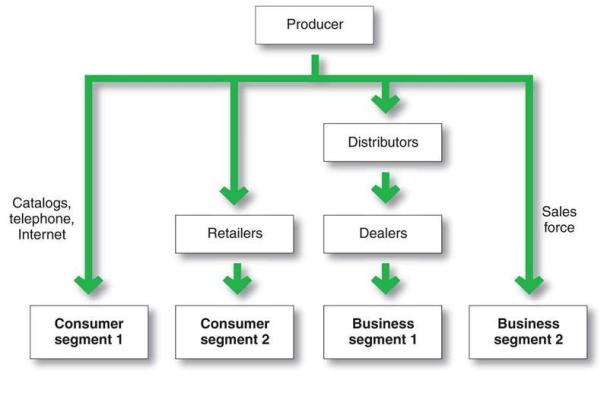

- How do we craft multiple channels within and outside of the system? In business lingo, that could translate into sales channels. These channels sell products and services and can add value along the way to the existing set of outcomes that the system is engineered for. The more channels there are that are mutually exclusive and clearly differentiated by their value propositions, the stronger the system and the greater its ability to scale quickly. These antennas, if you will, also serve as receptors for new information, feeding data into the organization, which can subsequently process it and reposition if the situation so warrants. Having as many differentiated antennas as possible is what constitutes the distribution strategy of the organization.

- The final cut is to enable a multi-dimensional loop between the external and internal systems such that the system expands at an accelerating pace without much intervention or proportionate changes in rules. In other words, the system expands autonomously, which is commonly known as the platform effect. Scale alone does not lead to the platform effect, although the platform effect most definitely could result in scale. However, scale can be an important contributor to the platform effect, and if the latter takes root, then the overall system achieves efficiency and scale in the long run.


Network Theory and Network Effects

Posted by Hindol Datta

Complexity theory needs to be coupled with network theory to get a more comprehensive grasp of the underlying paradigms that govern the outcomes and morphology of emergent systems. In order for us to understand the concept of network effects which is commonly used to understand platform economics or ecosystem value due to positive network externalities, we would like to take a few steps back and appreciate the fundamental theory of networks. This understanding will not only help us to understand complexity and its emergent properties at a low level but also inform us of the impact of this knowledge on how network effects can be shaped to impact outcomes in an intentional manner.

There are first-order conditions that must be met to gauge whether the subject of the observation is a network. Firstly, networks are all about connectivity within and between systems. Understanding the components that bind the system would be helpful. However, do keep in mind that complexity systems (CPS and CAS) might have emergent properties due to the association and connectivity of the network that might not be fully explained by network theory. All the same, understanding networking theory is a building block to understanding emergent systems and the outcome of its structure on addressing niche and macro challenges in society.

Networks operate in an abstract space of their own, and that has been done intentionally to allow some simplification and subsequent generalization of principles. The geometry of a network is called the network topology. It is a 2D perspective of connectivity.

Networks are subject to constraints (physical resources, governance constraint, temporal constraints, channel capacity, absorption and diffusion of information, distribution constraint) that might be internal (originated by the system) or external (originated in the environment that the network operates in).

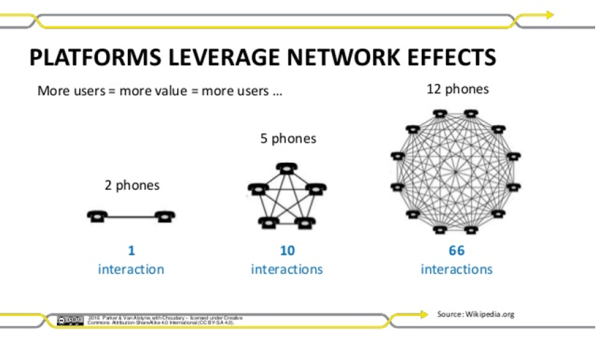

Finally, there is an inherent non-linearity in networks. As nodes increase linearly, the number of possible connections grows much faster (roughly with the square of the number of nodes), although that growth might be subject to constraints. The constraints might define how the network structure might morph and how information and signals might be processed differently.
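To put a rough number on how quickly connections outpace nodes, here is a minimal sketch. It assumes an undirected network in which any pair of nodes may be linked and no constraints apply; the function name `possible_links` is only illustrative.

```python
def possible_links(n: int) -> int:
    """Distinct undirected links among n nodes: n * (n - 1) / 2."""
    return n * (n - 1) // 2


# Doubling the node count roughly quadruples the possible links.
for n in (5, 10, 20, 40):
    print(f"{n:>3} nodes -> {possible_links(n):>4} possible links")
```

Real networks rarely realize every possible link, which is where the constraints mentioned above come into play.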

Graph theory is the most widely used tool to study networks. It consists of five parts: vertices, which represent the elements in the network; edges, which refer to the relationships between nodes and which we call links; directionality, which refers to how the information is passed (is it random and bi-directional, or does it follow specific rules and flow in one direction?); channels, which refer to the bandwidth that carries information; and finally the boundary, which establishes specificity around network operations. A graph can be weighted: namely, a number can be assigned to each link to reflect the degree of interaction, the strength of resources, the proximity of the nodes or the ordering of discernible clusters.

The central concept of network theory thus revolves around connectivity between nodes and how non-linear emergence occurs. A node can have multiple connections with other nodes, and we can weight the node accordingly. In addition, the purpose of networks is to pass information in the most efficient manner possible, which leads to the concept of a geodesic: either the shortest path between two nodes that must work together to achieve a purpose, or the least number of leaps through links that information must negotiate between the nodes in the network.

Technically, you look for the longest of the shortest paths between any two nodes in the network, and that constitutes the diameter, while you calculate the average path length by examining the shortest path between each pair of nodes, adding all of those paths up and then dividing by the number of pairs. Understanding the geodesic gives a sense of the size of the network and the throughput that the network is capable of.
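The two measures can be sketched in a few lines. This is a minimal illustration, assuming an undirected, connected, unweighted network stored as an adjacency dictionary; the five-node `toy` network and the function names are hypothetical.

```python
from collections import deque
from itertools import combinations


def shortest_path_lengths(graph, source):
    """Breadth-first search: hop counts from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


def diameter_and_average_path_length(graph):
    """Assumes every node can reach every other node."""
    all_dist = {node: shortest_path_lengths(graph, node) for node in graph}
    # One geodesic (shortest path length) per unordered pair of nodes.
    geodesics = [all_dist[a][b] for a, b in combinations(graph, 2)]
    return max(geodesics), sum(geodesics) / len(geodesics)


# Hypothetical network: a chain A-B-C-D with E attached to C.
toy = {
    "A": ["B"],
    "B": ["A", "C"],
    "C": ["B", "D", "E"],
    "D": ["C"],
    "E": ["C"],
}
diameter, average_path_length = diameter_and_average_path_length(toy)
print(diameter, average_path_length)
```

In this toy network the longest geodesic runs from A to D (or A to E), so the diameter is three hops even though the network has five nodes.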

Nodes are the atomic elements in the network. It is presumed that a node's degree of significance is related to a greater number of connections. There are other factors that are important considerations: how adjacent or close are the nodes to one another, do some nodes have authority or remarkable influence over others, are nodes positioned to be a connector between other nodes, and how capable are the nodes of absorbing, processing and diffusing the information across the links or channels? How difficult is it for the agents or nodes in the network to make connections? It is presumed that if the density of the network is increased, then we create a propensity in the overall network system for increased connectivity.

As discussed previously, our understanding of the network is deeper once we understand the elements well. The structure or network topology is represented by the graph, and then we must understand the size of the network and the patterns that are manifested in the visual depiction of the network. Patterns, for our purposes, might refer to clusters of nodes that are tribal or share geographical proximity, that self-organize and thus influence the structure of the network. We will introduce a new term, homophily, where agents connect with those like themselves. This attribute presumably means fewer resources are needed to process information and diffuse outcomes within the cluster. Most networks have a cluster bias: in other words, there are areas where there is increased activity or increased homogeneity in attributes or some form of metric that enshrines a group of agents under one specific set of values or activities. Understanding the distribution of clusters and the cluster bias makes it easier to influence how to propagate or even dismantle the network. This leads to an interesting question: can a network that emerges spontaneously from the informal connectedness between agents be subjected to some high dominance coefficient? Namely, could there be nodes or links that might exercise significant weight on the network?

The network has to align to its environment. The environment can place constraints on the network. In some instances, the agents have to figure out how to overcome or optimize their purpose in the presence of the environmental constraints. There is literature that suggests the existence of random networks, which might be an initial state, but it is widely agreed that these random networks self-organize around their purpose and their interaction with their environment. Network theory assigns a number to the degree distribution to indicate whether all or most nodes have an equivalent degree of connectivity or whether a node or a cluster exerts a skewed influence on the network. A low number suggests a network that is very democratic, whereas a high number suggests centralization. To get a more practical sense, a mid-range number constitutes a decentralized network which has close affinities and is not fully random. We have heard of the six degrees of separation, and that linkage or affinity is most closely tied to a mid-range number assigned to the network.

We are now getting into discussions on scale and binding this with network theory. Metcalfe’s law states that the value of a network grows as the square of the number of nodes in the network. The more people join the network, the more valuable the network becomes. Essentially, a feedback loop is created, and this feedback loop can kindle rapid growth in the network. There are two other topics: contagion and resilience. Contagion refers to the ability of the agents to diffuse information. This information can grow the network or dismantle it. Resilience refers to how the network is organized to preserve its structure. As you can imagine, both have huge implications. How do certain ideas proliferate over others, how do they cluster and create sub-networks which might grow to become large independent networks, and how does a network create natural defense mechanisms against self-immolation and destruction?
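The feedback loop in Metcalfe’s law can be seen in a small sketch. It assumes value is simply proportional to the square of the node count; the proportionality constant `scale` and both function names are illustrative assumptions, not part of the law itself.

```python
def metcalfe_value(nodes: int, scale: float = 1.0) -> float:
    """Network value under Metcalfe's law: proportional to nodes squared."""
    return scale * nodes ** 2


def marginal_value(nodes: int, scale: float = 1.0) -> float:
    """Extra value created when one more node joins a network of this size."""
    return metcalfe_value(nodes + 1, scale) - metcalfe_value(nodes, scale)


# Each joiner adds more value than the previous one did (2n + 1 per joiner).
for n in (10, 100, 1000):
    print(n, marginal_value(n))
```

Because each new member adds more value than the last, a larger network gives the next prospective member a stronger reason to join.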

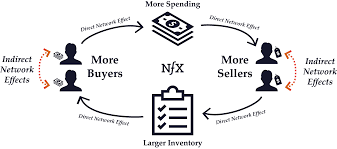

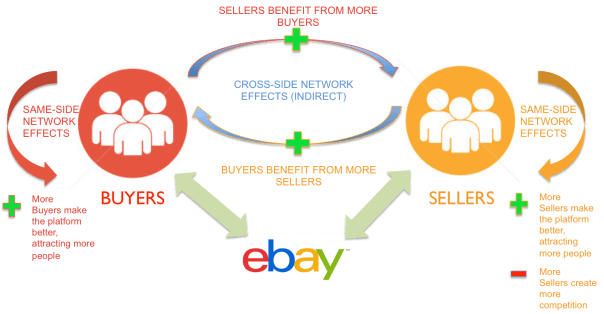

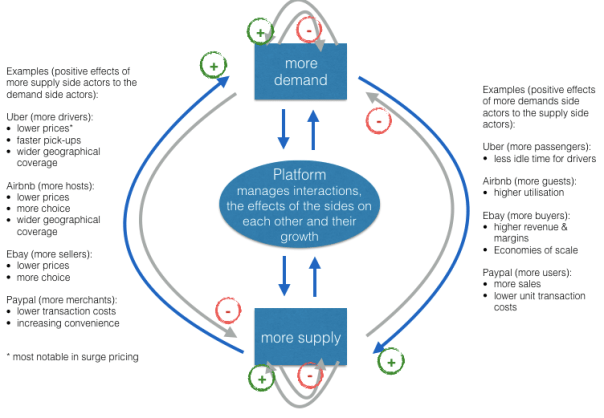

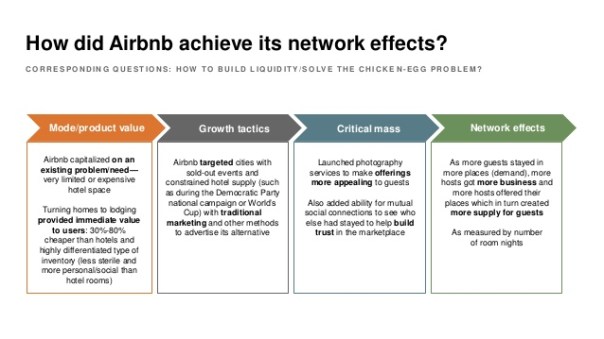

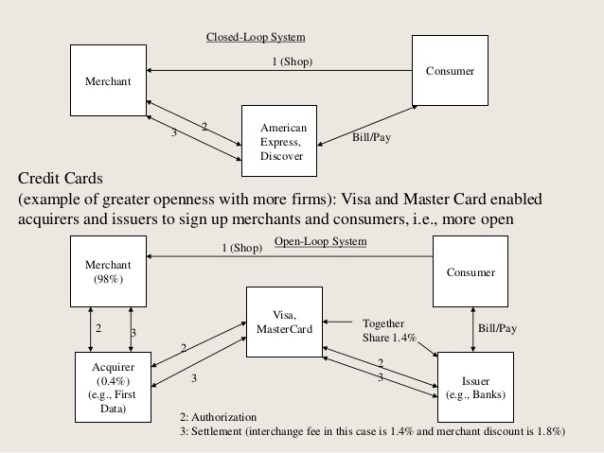

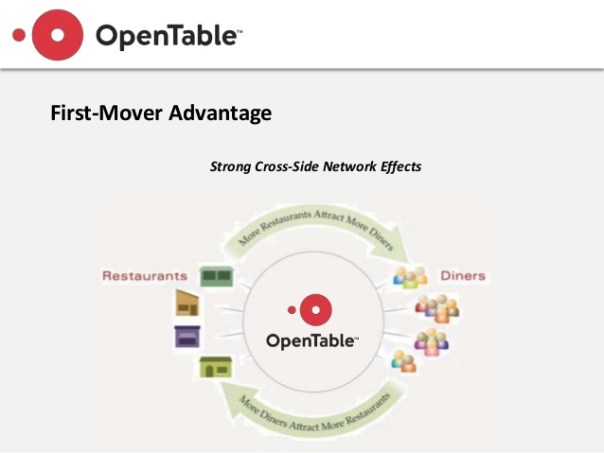

A network effect is what economics commonly calls an externality. It is an effect that is external to the transaction but influences the transaction. It is the incremental benefit gained by an existing user for each new user that joins the network. There are two types of network effects: direct network effects and indirect network effects. Direct network effects are same-side effects. The value of a service goes up as the number of users goes up. For example, the more people have phones, the more useful it is for you to have a phone. The entire value proposition is one-sided. Indirect network effects are multi-sided. They lend themselves to our current thinking around platforms and why smart platforms can exponentially increase the network. The value of the service increases for one user group when a new user group joins the network. Take for example the relationship between credit card banks, merchants and consumers. There are three user groups, and each gathers different value from the network of agents that have different roles. If more consumers use credit cards to buy, more merchants will sign up to accept the credit cards, and as more merchants sign up, more consumers will sign up with the bank to get more credit cards. This would be an example of a multi-sided platform that inherently has multi-sided network effects. The platform gains significant power such that it becomes more valuable for participants in the system to join the network despite the incremental costs associated with joining the network. Platforms that are built upon effective multi-sided network effects grow quickly and are generally sustainable. Having said that, it could be just as easy for a few dominant bad actors in the network to dismantle and unravel the network completely. We often hear of the tipping point: namely, that once the platform reaches a critical mass of users, it would be difficult to dismantle it. That would certainly be true if the agents and services are, in the aggregate, distributed fairly across the network: but it is also possible that new networks creating even more multi-sided network effects could displace an entrenched network. Hence, it is critical that platform owners manage the quality of content and users and continue to look for opportunities to introduce more user groups to entrench and yet exponentially grow the network.


Comparative Literature and Business Insights

Posted by Hindol Datta

“Literature is the art of discovering something extraordinary about ordinary people, and saying with ordinary words something extraordinary.” – Boris Pasternak

“It is literature which for me opened the mysterious and decisive doors of imagination and understanding. To see the way others see. To think the way others think. And above all, to feel.” – Salman Rushdie

There is a common theme that cuts across literature and business. It is called imagination!

Great literature seeds the mind to imagine faraway places across times and unique cultures. When we read a novel, we are exposed to complex characters that are richly defined, and the readers’ subjective assessment of the character and the context defines their understanding of how the characters navigate the relationships and their environment. Great literature offers many pauses for thought, and long after the book is read through … the theme gently seeps in like silt in the readers’ cumulative experiences. It is in literature that the concrete outlook of humanity receives its expression. Comparative literature, which is literature assimilated across many different countries, enables a diversity of themes that intertwine into the readers’ experiences, augmented by the reality of what they immediately experience: home, work, etc. It allows one to not only be capable of empathy but also … to craft out the fluid dynamics of ever-changing concepts by dipping into many different types of case studies of human interaction. The novel and the poem are the bulwarks of literature. It is as important to study a novel as it is to enjoy great poetry. The novel lays out a plot, or plots, and a rich tapestry of actions of the characters that navigate through these environments: poetry is the celebration of the ordinary in extraordinary enactments of the rhythm of the language that transport the readers, through images and metaphor, into single moments. It breaks the linear process of thinking, a perpendicular to a novel.

Business insights are generally a result of acute observation of trends in the market, internal processes, and general experience. Some business schools practice the case study method, which allows the student to have a fairly robust set of data points to fall back upon. Some of these case studies are fairly narrow, but there are some that get one to think about personal dynamics. It is a fact that personal dynamics, biases and positioning play a very important role in how one advocates, views, or acts upon a position. Now the schools are layering in classes on ethics to convey that there are some fundamental protocols of human nature that one has to follow: the famous adage “All is fair in love and war” has lost and continues to lose its edge over time. Globalization, environmental consciousness, individual rights, the idea of democracy, the rights of fair representation, community service and business philanthropy are playing a bigger role in today’s society. Thus, business insights today are a result of reflection across multiple levels of experience that encompass not just the company or the industry … but a broader array of elements that exercise influence on the company direction. In addition, one always seeks an end in mind … people perpetually embrace a vision that is shaped by their judgments, observations and thoughts. Poetry adds the final wing for the flight into this metaphoric realm of interconnections, for that is always what a vision is: a semblance of harmony that inspires and resurrects people to action.

I contend that comparative literature is a leading indicator that allows a person to get a feel for the general direction of the express and latent needs of people. Furthermore, comparative literature does not offer a solution. Great literature does not portend a particular end. It leaves open a multitude of possibilities and what-ifs. Readers can literally transport themselves into the environment and wonder at how they would act … the jump into a vicarious existence steeps the reader in a reflection that sharpens the intellect. This allows the reader in a business to be better positioned to excavate and address the needs of current and potential customers across boundaries.

“Literature gives students a much more realistic view of what’s involved in leading” than many business books on leadership, said the professor. “Literature lets you see leaders and others from the inside. You share the sense of what they’re thinking and feeling. In real life, you’re usually at some distance and things are prepared, polished. With literature, you can see the whole messy collection of things that happen inside our heads.” – Joseph L. Badaracco, the John Shad Professor of Business Ethics at Harvard Business School (HBS)

Viral Coefficient – Quick Study and Social Network Implications

Posted by Hindol Datta

Virality is a metric that has been borrowed from the field of epidemiology. It pertains to how quickly an element or content spreads through the population. Thus, these elements could be voluntarily or involuntarily adopted. Applying it to the world of digital content, I will restrict my scope to that of voluntary adoption by participants who have come into contact with the elements.

The two driving factors around virality are the Viral Coefficient and the Viral Cycle Time. They are distinct concepts, but once put together in a tight system within the context of product design for dissemination, they become a very powerful customer acquisition tool. However, this certainly does not mean that increased virality will lead to increased profits. We will touch upon this subject later on, for in doing so we have to assess what profit means: in other words, the various components in the profit equation and whether virality has any consequence for the result. Introducing a profit motive in a viral environment could, on the other hand, lead to counterproductive consequences and may depress the virality coefficient and increase entropy in the network.

What is the Viral Coefficient?

You will often hear the Viral Coefficient referred to as K. Suppose you put an application out on the web as a private beta and offer your testers a tool to invite their contacts to register. Say you start off with 10 private beta testers, each of them invites 10 friends, and 20% of those friends actually convert to registered users. What does this mean mathematically as we step through the first cycle? Incrementally, that would mean 10*10*20% = 20 new users generated by your initial ten users. So at the end of the first cycle, you would have 30 users. But bear in mind that this is the first cycle only. Now the 30 users each have the Invite tool to send to 10 additional people, of whom 10% convert. What does that translate to? It would be 30*10*10% = 30 additional people over your current installed base of 30. That means you now have a total of 60 users. So you have essentially sent out 100 invites and then another 300 invites for a total of 400 invites, and you have converted 50 users out of the 400 invites, which translates to a 12.5% conversion rate through the second cycle. In general, you will find that as you step through more cycles, your conversion percentage will actually decay. In the first cycle, the viral coefficient (K) = 2 (Number of Invites (10) * conversion percentage (20%)), and in the incremental second cycle (K) = 1 (Number of Invites (10) * conversion percentage (10%)). Blended across both cycles, K = 1.25 (Number of Invites (10) * conversion percentage (12.5%)). If K < 1, the system lends itself to decay … the pace of decay being a function of how low the viral coefficient is. On the other hand, if you have K > 1 (or 100%), then your system will grow fairly quickly. The actual growth will be based on your starting base. A large starting base with K > 1 is a fairly compelling model for growth.
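The arithmetic of the two cycles can be stepped through in code. This is a minimal sketch that takes K to be invites per user times conversion rate, and assumes every current user sends invites in each cycle, as in the example; the function name `run_cycles` and the blended calculation (new users divided by users who sent invites) are illustrative assumptions.

```python
def run_cycles(seed_users, invites_per_user, conversion_rates):
    """Step through invite cycles; every current user invites in each cycle."""
    users = seed_users
    history = []
    for rate in conversion_rates:
        invites = users * invites_per_user
        new_users = round(invites * rate)
        history.append({
            "senders": users,
            "invites": invites,
            "new_users": new_users,
            "k": invites_per_user * rate,  # K for this cycle alone
        })
        users += new_users
    return users, history


total_users, history = run_cycles(10, 10, [0.20, 0.10])

total_invites = sum(c["invites"] for c in history)
total_new = sum(c["new_users"] for c in history)
total_senders = sum(c["senders"] for c in history)
overall_conversion = total_new / total_invites   # 50 / 400
blended_k = total_new / total_senders            # 50 / 40

for cycle in history:
    print(cycle)
print(total_users, overall_conversion, blended_k)
```

Passing a longer list of decaying conversion rates shows how quickly K slips below 1 once the easy converts have been reached.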

The Viral Cycle Time:

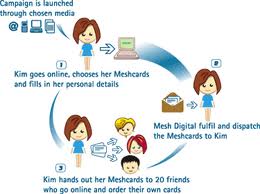

This is the response time of a recipient to act upon an invite and send it out to their connections. In other words, using the above example, when your 10 users send out 10 invites and they are immediately acted upon (for modeling simplicity, immediate means getting the invite, turning it around and sending additional invites immediately, and so on), that constitutes the velocity of the viral cycle, otherwise known as the Viral Cycle Time. The growth and adoption of your product is a function of the viral cycle time. In other words, a longer viral cycle time yields significantly lower growth than a shorter one. For example, if you reduce viral cycle time by ½, you may experience 100X+ growth. Thus, it is another important lever to manage the growth and adoption of the application.
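How much a shorter cycle time matters can be sketched with a simple geometric model. It assumes that only the newest cohort of users sends invites in each cycle and that K stays constant, so total users after n cycles is the seed times (1 + K + … + K^n); the 20-day horizon, K = 2 and the seed of 10 users are hypothetical inputs chosen for illustration.

```python
def users_after(days, cycle_time_days, k, seed_users):
    """Total users after `days`, when each new cohort brings k users per cycle."""
    cycles = days / cycle_time_days
    if k == 1:
        return seed_users * (cycles + 1)
    # Closed form of the geometric series seed * (1 + k + ... + k**cycles).
    return seed_users * (k ** (cycles + 1) - 1) / (k - 1)


slow = users_after(days=20, cycle_time_days=2, k=2, seed_users=10)  # 10 cycles
fast = users_after(days=20, cycle_time_days=1, k=2, seed_users=10)  # 20 cycles
print(slow, fast, fast / slow)
```

Under these assumptions, halving the cycle time doubles the number of cycles within the same horizon, and the compounding produces a gap of roughly a thousandfold. The size of the gap depends heavily on K and on the horizon chosen, so the multiple should be read as indicative only.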

So when one speaks of Virality, we have to consider the Virality Coefficient and the Viral Cycle Time. These are the key components, and the drivers of these components may have dependencies, but there could be some independent underlying value drivers. Virality hence must be built into the product. It is common to think that marketing creates virality. I believe that marketing certainly does influence virality, but it is more important, if and when possible, to design the product with the viral hooks.

Standing Ovation Problem and Product Design

Posted by Hindol Datta

When you seed another social network into an ecosystem, you are, for the lack of a better word, embracing the tenets of a standing ovation model. The standing ovation model has become, as of late, the fundamental rubric upon which several key principles associated with content, virality, emulation, cognitive psychology, location principles, social status and behavioral impulse coalesce together in various mixes to produce what would be the diffusion of the social network principles as it ripples through the population it contacts. Please keep in mind that this model provides the highest level perspective that fields the trajectory of the social network dynamics. There are however a number of other models that are more tactical and borrowed from the fields of epidemiology and growth economics that will address important elements like the tipping points that generally play a large role in essentially creating that critical mass of crowdswell, which once attained is difficult to reverse, unless of course there are legislative and technology reversals that may defeat the dynamics.

So I will focus, in this post, on the importance of the standing ovation model. The basic SOP (Standing Ovation Problem) can be simply stated as follows: a lecture or content display in front of an audience ends and the audience starts to applaud. The applause builds and, tentatively, a few audience members may or may not decide to stand. This could be abstracted in our world as an audience member who is a passive user versus an active user in the ecosystem. The question that emerges is whether a standing ovation ensues or the enthusiasm fizzles. The SOP was first studied by Schelling.

In the simplest form of the model, when a performance or content consumption ends, an audience member must decide whether or not to stand. Now if the decision to stand is made without any consideration of the dynamics of the other people in the audience, then there is no problem per se and the SOP model does not come into play. However, if the random person is on the fence or is reluctant or may not have enjoyed the content … would the behavioral and location dynamics of the other participants in the audience influence him enough to stand even against his better judgment? The latter case is an example of an information cascade, or what is often called the “following the herd” mentality, which essentially means that the individual abnegates his position in favor of the collective judgment of the people around him. So this model and its application to social networks is best explained by looking at the following elements:

1. Group Response: If you are part of a group and you have your own set of judgments governing your decision to stand up, are you willing to suspend those judgments to be part of group behavior? At what point is a person willing to set aside doubt and play along with a larger response? This has important implications. For example, if you are in an audience as a member of a group that you know well, and a certain threshold number in the group responds favorably to the content, there is some likelihood that you will follow along. On the other hand, if you are an individual in the audience who is not connected to a group, there is still some chance that you will follow along once some threshold is met, for example: if I can see about 10 people stand, I will follow along. In a known group, where you may be one participant among five people, you may stand up if even 3 people stand, even though that does not meet your 10-people formula. This matters for cohorts, for building groups, and for providing tools and computational agents in social networks that incline a passive consumer to become an active consumer.

2. Visibility to the Group: Location is an important piece of the SOP. Imagine a theater. If you are seated in the center of the front row, you will not be cognizant of other people’s reactions unless you turn back. Thus, your response will be driven primarily by the intensity of your own reaction to the content. On the other hand, if you are seated further back, you will have a broader perspective and you may respond to how the others respond to the content. What does this mean for social dynamics and for introducing more active participation? Simply that you have to provide the underlying mechanisms that allow people to see, within a short time frame, how a threshold mass of people has responded. Affording visibility to the one person who would then follow up with the desired response creates the information cascade that culminates in a large standing ovation.

3. Beachhead Response: An audience will have bias. That is another presumption in the model. Audience members carry certain judgments into a show, one of which is that the people in front who have bought the expensive seats are influential and have “celebrity” status. Depending on the weight of this bias, a random person may not respond positively, in spite of a positive audience response, if the front rows do not respond positively. He heavily discounts the general audience threshold in favor of a threshold associated with a select group, which can result in different behavior. Conversely, if the beachhead responds positively and the general audience does not, the random person may still react positively despite the general threshold dynamics. The point is that designing and developing products in a social environment requires measuring such biases, observing responses, and then introducing computational agents to create fuller participation.
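The three elements above can be made concrete with a small simulation. What follows is only a minimal sketch under stated assumptions, not a definitive implementation of the SOP: the function names (`initial_reactions`, `cascade`), the grid layout of the theater, the single `follow_fraction` threshold, and the `front_weight` bias are all illustrative choices that do not come from the post. Each member first reacts to the content alone; after that, a member stands once the weighted share of people standing in the rows ahead reaches the threshold.

```python
import random


def initial_reactions(rows, cols, quality, stand_threshold=0.6, noise=0.3, seed=0):
    """Round 0: each member stands purely on a private reaction to the content.

    All parameter names are hypothetical. The private reaction is the content
    quality plus personal noise drawn uniformly from [-noise, noise].
    """
    rng = random.Random(seed)
    return [
        [quality + rng.uniform(-noise, noise) > stand_threshold for _ in range(cols)]
        for _ in range(rows)
    ]


def cascade(standing, follow_fraction=0.5, front_rows=1, front_weight=1.0):
    """Run herd-following rounds until nobody else stands up.

    standing: list of rows (row 0 is the front) of booleans.
    Returns the fraction of the audience standing after each round.
    Assumption: members never sit back down once they stand.
    """
    rows, cols = len(standing), len(standing[0])
    grid = [row[:] for row in standing]
    history = [sum(map(sum, grid)) / (rows * cols)]
    while True:
        nxt = [row[:] for row in grid]
        for r in range(1, rows):  # the front row sees nobody, so it never follows
            seen = stood = 0.0
            for ahead in range(r):  # visibility: only the rows in front
                # Beachhead bias: the first `front_rows` rows count extra.
                w = front_weight if ahead < front_rows else 1.0
                seen += w * cols
                stood += w * sum(grid[ahead])
            if stood / seen >= follow_fraction:  # group-response threshold
                nxt[r] = [True] * cols
        if nxt == grid:  # no one changed: the ovation has settled
            return history
        grid = nxt
        history.append(sum(map(sum, grid)) / (rows * cols))


if __name__ == "__main__":
    start = initial_reactions(rows=10, cols=10, quality=0.6)
    for weight in (1.0, 3.0):
        print(weight, cascade(start, front_rows=2, front_weight=weight))
```

Because everyone in a row sees the same rows ahead, a whole row stands together in this sketch; giving each member an individual threshold, or a lower threshold for known friends, would be a natural refinement of the group-response element.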

Thus, the SOP is the crux around which product design has to be considered. To the extent possible, bringing in a person who belongs to a group, has spatial visibility, and responds accordingly makes for an enduring response to content. Of course, content is a critical component as well: poor content, regardless of all the ovation agents introduced, may not trigger the desired response. Content is therefore as important a pillar as the placement of the random person with their thresholds of reaction. So build the content, design the audience, and design the placement of the random person so that all three coalesce to turn a passive audience member into an active participant.

Posted in Leadership, Learning Organization, Organization Architecture, Ovation, Social Dynamics, Social Gaming, Social Network, Social Systems, Virality

Tags: applause, audience, copy, emulate, group, leadership, motivation, product design, recognition, social dynamics, social network, social networks, social normns, virality