The Law of Unintended Consequences

Posted by Hindol Datta

The Law of Unintended Consequences holds that the actions of a central body, even one that claims omniscient, omnipotent, and omnivalent intelligence, may in fact lead to consequences that were neither anticipated nor intended.

The concept of the Invisible Hand, introduced by Adam Smith, holds that the self-interest of all market agents ultimately creates a system that maximizes the good for the greatest number of people.

Robert K. Merton, a sociologist, studied the law of unintended consequences. In an influential 1936 article titled “The Unanticipated Consequences of Purposive Social Action,” Merton identified five sources of unanticipated consequences.

Ignorance makes it difficult, if not impossible, to anticipate the behavior of every element of the system, which leads to incomplete analysis.

Errors can occur when someone relies on historical data and projects the context of the past onto the future. Linear thinking is a good example of an error we are wrestling with right now: looking back, we understand that some systems grow exponentially, but it is hard to anticipate the outcome unless one is willing to take a leap of faith.
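To make the linear-thinking error concrete, here is a minimal Python sketch with made-up numbers: a quantity that doubles every period is observed for five periods and then forecast by extending the most recent period-to-period change in a straight line. The function names and figures are hypothetical, chosen only for illustration.

```python
# Hypothetical illustration: a quantity that doubles each period,
# forecast by extending its most recent change as a straight line.

def exponential(start: float, rate: float, periods: int) -> list[float]:
    """Actual values of a quantity growing by `rate` per period."""
    return [start * (1 + rate) ** t for t in range(periods + 1)]


def linear_forecast(history: list[float], periods_ahead: int) -> list[float]:
    """Extend the last observed one-period change in a straight line."""
    slope = history[-1] - history[-2]
    return [history[-1] + slope * k for k in range(1, periods_ahead + 1)]


actual = exponential(start=1.0, rate=1.0, periods=8)  # 1, 2, 4, ..., 256
history = actual[:5]                                  # observed: 1, 2, 4, 8, 16
forecast = linear_forecast(history, periods_ahead=4)  # straight-line guesses

for t, (f, a) in enumerate(zip(forecast, actual[5:]), start=5):
    print(f"period {t}: linear forecast {f:.0f}, actual {a:.0f}")
```

The forecast falls further behind every period (24 against 32, then 48 against 256 by period 8), which is the sense in which linear extrapolation of an exponential system understates the outcome.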

Biases work their way into the analysis as well. We study a system under the weight of our biases, intentional or unintentional, and it is hard to strip them away even when different schools of thought exist about a particular system and how a certain action upon it would affect it.

Woven together with bias is the element of basic values, which may require or prohibit certain actions even if their long-term impact is unfavorable. A good example is the set of toll gates the FDA has established before drugs can be commercialized. In aiming to ensure that drugs are safe, the policy may slow the release of drugs for experimental and commercial use so much that many patients who would otherwise benefit from them lose out.

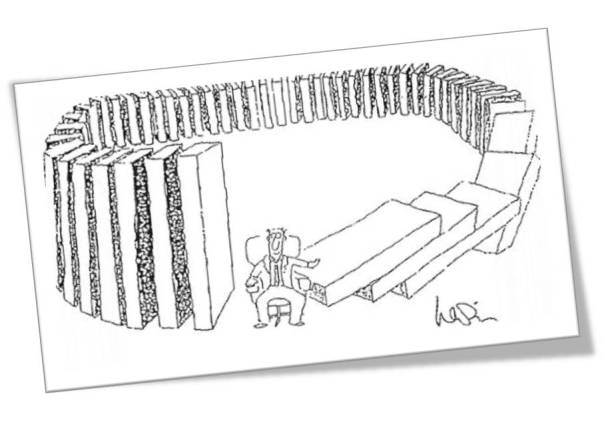

Finally, he discusses the self-fulfilling prophecy, which suggests that tinkering with the elements of a system to avert a catastrophic event might actually bring that event about.

It is important, however, to acknowledge that unintended consequences do not necessarily lead to negative outcomes. In fact, there can be unanticipated benefits. A good example is Viagra, which started off as a pill to lower blood pressure but was found to be effective in treating erectile dysfunction. Another is the discovery that sunken ships in shallow waters became habitats for very rich coral reefs, which led scientists to new discoveries about how flora and fauna emerge in these habitats.

Even initiatives regarded as “positive,” intended to steer the system toward the greatest good, can often prove catastrophic in the long term. Merton attributes this kind of unanticipated consequence to what is called the “relevance paradox”: decision makers think they know their areas of ignorance regarding an issue and obtain the information needed to fill that gap, but intentionally or unintentionally neglect other areas because their relevance to the final outcome is unclear or does not line up with their values. He goes on to argue, in a nutshell, that unintended consequences stem from our hubris: we are hardwired to put our short-term interests ahead of long-term ones, so we tinker with the system to produce an effect that later blows back in unexpected forms. Albert Camus has said that “The evil in the world almost always comes of ignorance, and good intentions may do as much harm as malevolence if they lack understanding.”

An interesting emergent property related to the law of unintended consequences is the concept of Moral Hazard: individuals have an incentive to alter their behavior when the cost of their risks or bad decisions is borne by, or diffused among, others. For example:

If you have an insurance policy, you may take more risks than you otherwise would. The cost of those risks affects the overall economics of the insurance pool and may shift costs from the high-risk takers to the low-risk takers.
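A minimal Python sketch, using hypothetical numbers, shows how this redistribution can arise. It assumes a pool of 80 low-risk and 20 high-risk members who all pay a single premium equal to the pool's average expected loss, with no expenses or profit loading; the function name and figures are illustrative only.

```python
# Hypothetical insurance pool: every member pays one community-rated premium
# equal to the pool's average expected loss (no expenses or profit loading).

def pooled_premium(expected_loss: dict[str, float], members: dict[str, int]) -> float:
    """Average expected loss per member across all groups in the pool."""
    total_loss = sum(expected_loss[group] * count for group, count in members.items())
    return total_loss / sum(members.values())


members = {"low_risk": 80, "high_risk": 20}

# Expected annual loss per member before and after insurance changes behavior.
before = {"low_risk": 100.0, "high_risk": 300.0}
after = {"low_risk": 100.0, "high_risk": 500.0}  # insured high-risk members take more risk

p_before = pooled_premium(before, members)
p_after = pooled_premium(after, members)

# Amount each low-risk member pays above their own expected loss.
subsidy_before = p_before - before["low_risk"]
subsidy_after = p_after - after["low_risk"]

print(f"premium: {p_before:.0f} -> {p_after:.0f}")
print(f"low-risk overpayment: {subsidy_before:.0f} -> {subsidy_after:.0f}")
```

Under these assumptions, the high-risk members' extra risk-taking raises everyone's premium from 140 to 180, and the amount each low-risk member pays above their own expected loss doubles from 40 to 80.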

How do the conditions for moral hazard arise in the first place? Two conditions must hold. First, one party has more information than another. This information asymmetry creates the condition for moral hazard. For example, around 2006, sub-prime mortgage lenders extended loans to individuals with dubious income and means to pay. The banks buying these mortgages were not aware of this, and because of that information asymmetry they ended up holding a lot of toxic loans. Second, there must be an understanding that affects the behavior of the two agents. If a child knows that their parents will bail them out, the child might take risks they would otherwise not have taken.

To counter the possibility of unintended consequences, we need to raise our thinking to second-order thinking. Most of our thinking is simplistic, based on opinion, and not well grounded in facts. Many biases enter first-order thinking; in fact, all of the elements Merton touches on enter it, namely ignorance, biases, errors, personal value systems, and teleological thinking. Hence, it is important to engage in second-order thinking: a reasoning process that examines the interactions of elements, temporal impacts, and other system dynamics. We have mentioned before that it remains difficult to fully grapple with all the elements of emergent systems, even with the best second-order thinking, simply because the dynamics of a complex adaptive system or complex physical system deny us that crown of competence. Even so, this suggests that we should step away from simple, easy, and defensible heuristics when measuring and gauging complex systems.

Posted in Business Process, emergent systems, Learning Organization, Learning Process, Management Models, Order, Social Dynamics, Unintended Consequence

Tags: CAS, Complexity, experiments, innovation, intuition, open source, slippery slope, Systems design, Systems Thinking, uncertainty, unintended consequence