
The Finance Playbook for Scaling Complexity Without Chaos

From Controlled Growth to Operational Grace

Somewhere between Series A optimism and Series D pressure sits the very real challenge of scale. Not just growth for its own sake but growth with control, precision, and purpose. A well-run finance function becomes less about keeping the lights on and more about lighting the runway. I have seen it repeatedly. You can double ARR, but if your deal desk, revenue operations, or quote-to-cash processes are even slightly out of step, you are scaling chaos, not a company.

Finance does not scale with spreadsheets and heroics. It scales with clarity. With every dollar, every headcount, and every workflow needing to be justified in terms of scale, simplicity must be the goal. I recall sitting in a boardroom where the CEO proudly announced a doubling of the top line. But it came at the cost of three overlapping CPQ systems, elongated sales cycles, rogue discounting, and a pipeline no one trusted. We did not have a scale problem. We had a complexity problem disguised as growth.

OKRs Are Not Just for Product Teams

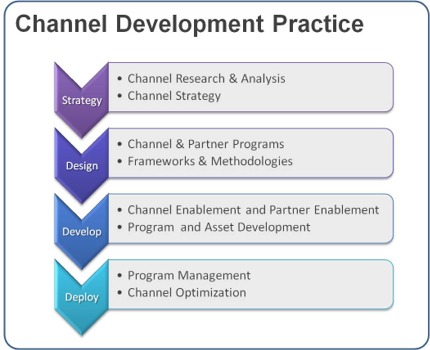

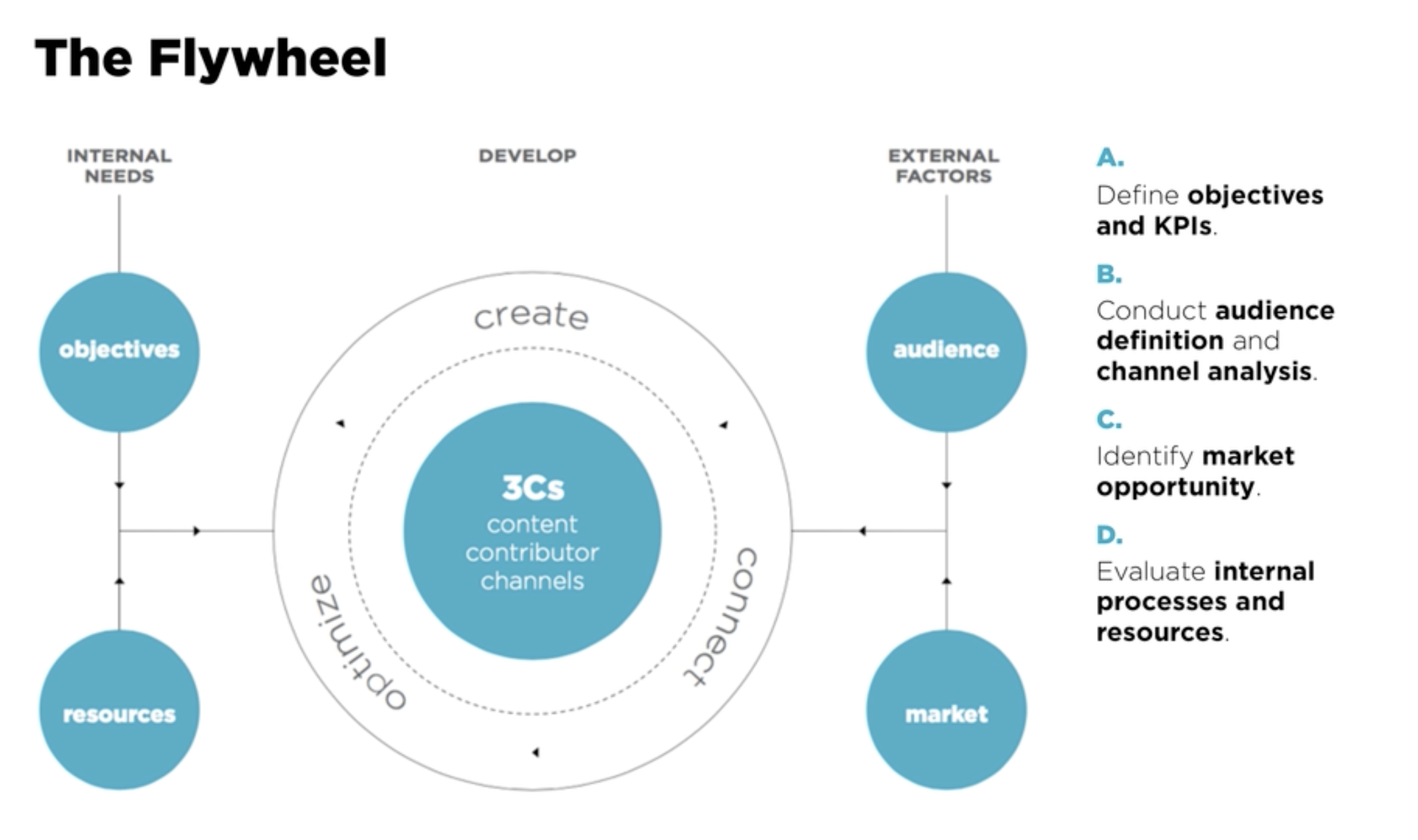

When finance is integrated into company OKRs, magic happens. We begin aligning incentives across sales, legal, product, and customer success teams. Suddenly, the sales operations team is not just counting bookings but shaping them. Deal desk isn’t just a speed bump before legal review, but a value architect. Our quote-to-cash process is no longer a ticketing system but a flywheel for margin expansion.

At one Series B company, the shift began by tying financial metrics directly to the revenue team’s OKRs. Quota retirement was not enough. They measured booked gross margin, customer acquisition cost, and implementation velocity. The sales team was initially skeptical but soon began asking more insightful questions. Deals that initially appeared promising were flagged early. Others that seemed too complicated were simplified before they even reached RevOps. Revenue is often seen as art. But finance gives it rhythm.

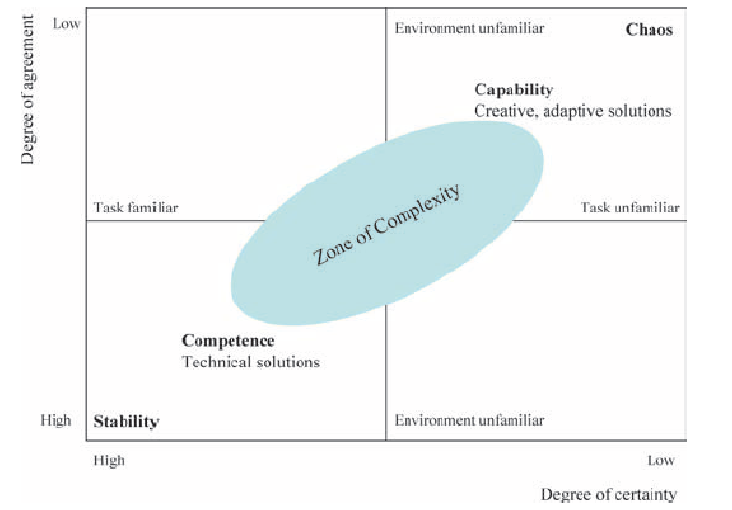

Scaling Complexity Despite the Chaos

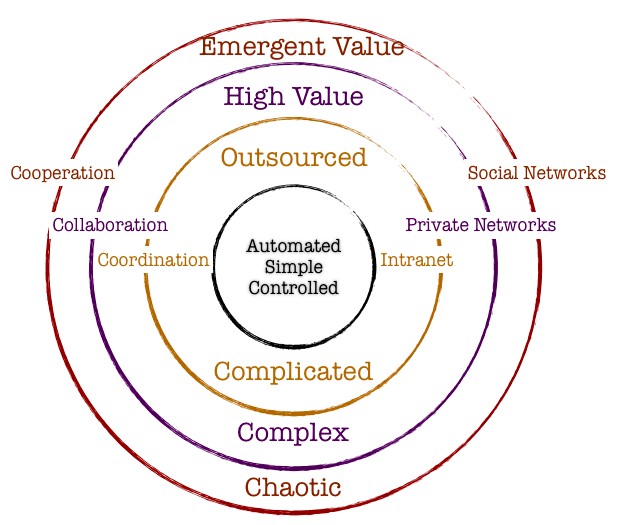

The truth is that chaos is not the enemy of scale. Chaos is the cost of momentum. Every startup that is truly growing at pace inevitably creates complexity. Systems become tangled. Roles blur. Approvals drift. That is not failure. That is physics. What separates successful companies is not the absence of chaos but their ability to organize it.

I often compare this to managing a growing city. You do not stop new buildings from going up just because traffic worsens. You introduce traffic lights, zoning laws, and transit systems that support the growth. In finance, that means being ready to evolve processes as soon as growth introduces friction. It means designing modular systems where complexity is absorbed rather than resisted. You do not simplify the growth. You streamline the experience of growing. Read Scale by Geoffrey West. Much of my interest in complexity theory and architecture for scale comes from it. Also, look out for my book, which will be published in February 2026: Complexity and Scale: Managing Order from Chaos. This book aligns literature in complexity theory with the microeconomics of scaling vectors and enterprise architecture.

At a late-stage Series C company, the sales motion had shifted from land-and-expand to enterprise deals with multi-year terms and custom payment structures. The CPQ tool was unable to keep up. Rather than immediately overhauling the tool, they developed middleware logic that routed high-complexity deals through a streamlined approval process, while allowing low-risk deals to proceed unimpeded. The system scaled without slowing. Complexity still existed, but it no longer dictated pace.
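The routing idea can be sketched in a few lines of Python. This is only an illustration, not the middleware that company built: the `Deal` fields, the `route_deal` function, and the 15 percent discount threshold are all hypothetical, chosen to show how low-risk deals can bypass review while complex ones are sent to a dedicated approval path.

```python
from dataclasses import dataclass


@dataclass
class Deal:
    """Hypothetical deal attributes used only for this illustration."""
    term_years: int
    custom_payment_terms: bool
    discount_pct: float


def route_deal(deal: Deal, max_auto_discount_pct: float = 15.0) -> str:
    """Send complex deals to review; let low-risk deals proceed untouched.

    The complexity rules (multi-year term, custom payment structure,
    discount above a threshold) are assumptions, not the source's rules.
    """
    is_complex = (
        deal.term_years > 1
        or deal.custom_payment_terms
        or deal.discount_pct > max_auto_discount_pct
    )
    return "deal_desk_review" if is_complex else "auto_approve"


simple = Deal(term_years=1, custom_payment_terms=False, discount_pct=10.0)
enterprise = Deal(term_years=3, custom_payment_terms=True, discount_pct=5.0)
print(route_deal(simple))      # auto_approve
print(route_deal(enterprise))  # deal_desk_review
```

Keeping the rules in one small function means they can change as the sales motion changes, without touching the CPQ tool itself.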

Cash Discipline: The Ultimate Growth KPI

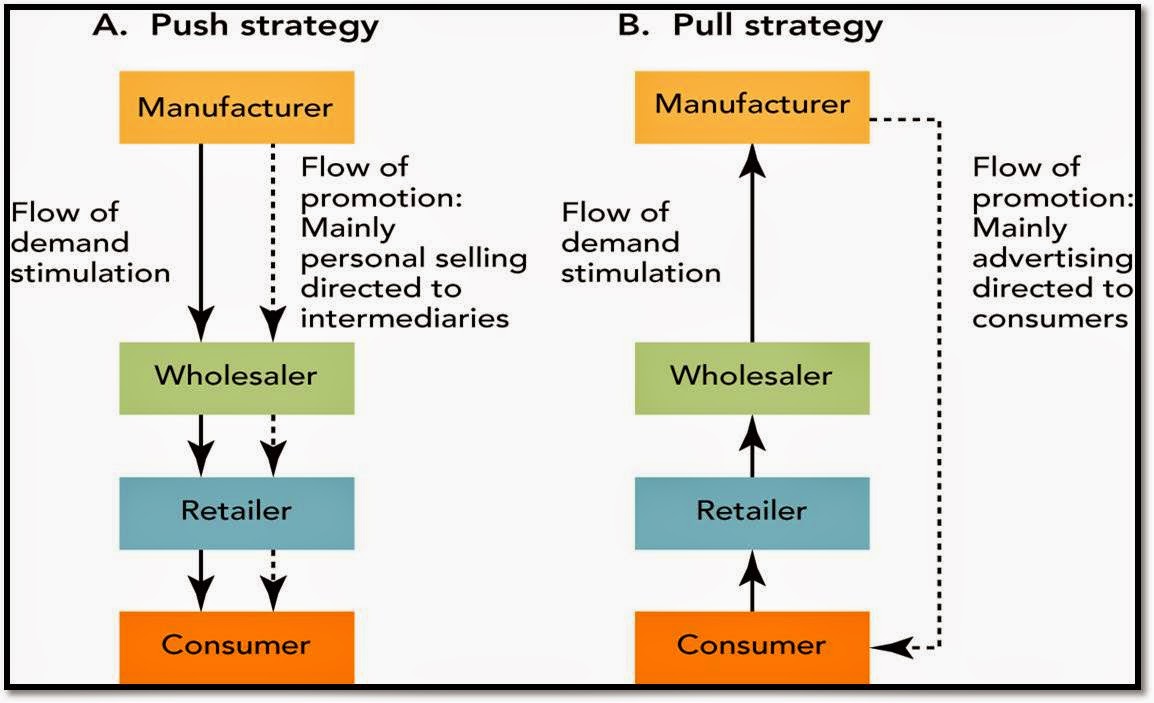

Cash is not just oxygen. It is alignment. When finance speaks early and often about burn efficiency, marginal unit economics, and working capital velocity, we move from gatekeepers to enablers. I often remind founders that the cost of sales is not just the commission plan. It’s in the way deals are structured. It’s in how fast a contract can be approved. It’s in how many hands a quote needs to pass through.

At one Series A professional services firm, they introduced a “Deal ROI Calculator” at the deal desk. It calculated not just price and term but implementation effort, support burden, and payback period. The result was staggering. Win rates remained stable, but average deal profitability increased by 17 percent. Sales teams began choosing deals differently. Finance was not saying no. It was saying, “Say yes, but smarter.”
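A calculator of this kind might look like the sketch below. The firm's actual model is not described, so the field names, the cost categories, and the simple undiscounted payback formula are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class DealInputs:
    """Hypothetical inputs; a real deal desk would likely track more."""
    annual_price: float
    term_years: float
    implementation_cost: float   # one-time effort to onboard the customer
    annual_support_cost: float   # recurring support burden
    acquisition_cost: float      # sales and marketing cost to win the deal


def deal_roi(d: DealInputs) -> dict:
    """Return profit, ROI, and payback period (months) for a single deal."""
    total_revenue = d.annual_price * d.term_years
    total_cost = (
        d.implementation_cost
        + d.acquisition_cost
        + d.annual_support_cost * d.term_years
    )
    profit = total_revenue - total_cost
    upfront = d.implementation_cost + d.acquisition_cost
    annual_margin = d.annual_price - d.annual_support_cost
    # Payback: months of recurring margin needed to recover upfront spend.
    payback_months = (
        upfront / annual_margin * 12 if annual_margin > 0 else float("inf")
    )
    roi = profit / total_cost if total_cost > 0 else float("inf")
    return {"profit": profit, "roi": roi, "payback_months": payback_months}


example = DealInputs(
    annual_price=120_000,
    term_years=3,
    implementation_cost=40_000,
    annual_support_cost=20_000,
    acquisition_cost=60_000,
)
print(deal_roi(example))
# {'profit': 200000, 'roi': 1.25, 'payback_months': 12.0}
```

Two deals with the same price and term can produce very different payback periods once implementation effort and support burden are included, which is the comparison the deal desk was trying to surface.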

Velocity is a Decision, Not a Circumstance

The best-run companies are not faster because they have fewer meetings. They are faster because decisions are closer to the data. Finance’s job is to put insight into the hands of those making the call. The goal is not to make perfect decisions. It is to make the best decision possible with the available data and revisit it quickly.

In one post-Series A firm, we embedded finance analysts inside revenue operations. It blurred the traditional lines but sped up decision-making. Discount approval times fell from 48 hours to 12-24 hours. Pricing strategies became iterative. A finance analyst co-piloted the forecast and flagged gaps weeks earlier than our CRM did. It wasn’t about more control. It was about more confidence.

When Process Feels Like Progress

It is tempting to think that structure slows things down. However, the right QTC design can unlock margin, trust, and speed simultaneously. Imagine a deal desk that empowers sales to configure deals within prudent guardrails. Or a contract management workflow that automatically flags legal risks. These are not dreams. These are the functions we have implemented.

The companies that scale well are not perfect. But their finance teams understand that complexity compounds quietly. And so, we design our systems not to prevent chaos but to make good decisions routine. We don’t wait for the fire drill. We design out the fire.

Make Your Revenue Operations Your Secret Weapon

If your finance team still views sales operations as a reporting function, you are underutilizing a strategic lever. Revenue operations, when empowered, can close the gap between bookings and billings. They can forecast with precision. They can flag incentive misalignment. One of the best RevOps leaders I worked with used to say, “I don’t run reports. I run clarity.” That clarity was worth more than any point solution we bought.

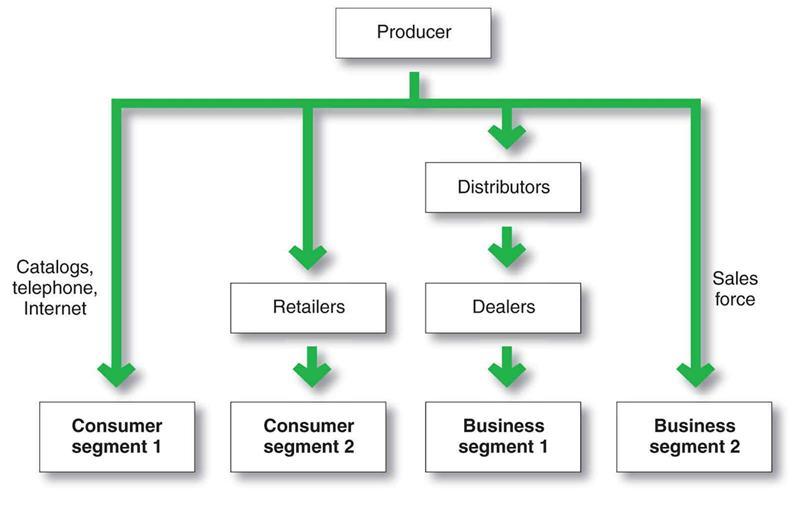

In scaling environments, automation is not optional. But automation alone does not save a broken process. Finance must own the blueprint. Every system, from CRM to CPQ to ERP, must speak the same language. Data fragmentation is not just annoying. It is value-destructive.

What Should You Do Now?

Ask yourself: Does finance have visibility into every step of the revenue funnel? Do our QTC processes support strategic flexibility? Is our deal desk a source of friction or a source of enablement? Can our sales comp plan be audited and justified in a board meeting without flinching?

These are not theoretical. They are the difference between Series C confusion and Series D confidence.

Let’s Make This Personal

I have seen incredible operators get buried under process debt because they mistook motion for progress. I have seen lean finance teams punch above their weight because they anchored their operating model in OKRs, cash efficiency, and rapid decision cycles. I have also seen the opposite. A sales ops function sitting in the corner. A deal desk no one trusts. A QTC process where no one knows who owns what.

These are fixable. But only if finance decides to lead. Not just report.

So here is my invitation. If you are a CFO, a CRO, a GC, or a CEO reading this, take one day this quarter to walk your revenue path from lead to cash. Sit with the people who feel the friction. Map the handoffs. And then ask, is this how we scale with control? Do you have the right processes in place? Do you have the technology to activate the process and minimize the friction?

How Strategic CFOs Drive Sustainable Growth and Change

When people ask me what the most critical relationship in a company really is, I always say it’s the one between the CEO and the CFO. And no, I am not being flippant. In my thirty years helping companies manage growth, navigate crises, and execute strategic shifts, the moments that determine success or spiraling failure have most often rested on how tightly the CEO and CFO operate together. One sets a vision. The other turns aspiration into action. Alone, each has influence; together, they can transform the business.

Transformation, after all, is not a project. It is a culture shift, a strategic pivot, a redefinition of operating behaviors. It’s more art than engineering and more people than process. And at the heart of it lies a fundamental tension: You need ambition, yet you must manage risk. You need speed, but you cannot abandon discipline. You must pursue new business models while preserving your legacy foundations. In short, you need to build simultaneously on forward momentum and backward certainty.

That complexity is where the strategic CFO becomes indispensable. The CFO’s job is not just to count beans; it is to clear the ground where new plants can grow. To unlock capital without unleashing chaos. To balance accountable rigor with growth ambition. To design transformation from the numbers up, not just hammer it into the planning cycle. When this role is fulfilled, the CEO finds their most trusted confidante, collaborator, and catalyst.

Think of it this way. A CEO paints a vision: We must double revenue, globalize our go-to-market, pivot into new verticals, revamp the product, or embrace digital. It sounds exciting. It feels bold. But without a financial foundation, it becomes delusional. Does the company have the cash runway? Can the old cost base support the new trajectory? Are incentives aligned? Are the systems ready? Will the board nod or push back? Who is accountable if the sales forecast misses or an integration falters? A CFO’s strategic role is to bring those questions forward, not cynically but constructively, so the ambition becomes executable.

The best CEOs I’ve worked with know this partnership instinctively. They build strategy as much with the CFO as with the head of product or sales. They reward honest challenge, not blind consensus. They request dashboards that update daily, not glossy decks that live in PowerPoint. They ask, “What happens to operating income if adoption slows? Can we reverse full-time hiring if needed? Which assumptions unlock upside with minimal downside?” Then they listen. And change. That’s how transformation becomes durable.

Let me share a story. A leader I admire embarked on a bold plan: triple revenue in two years through international expansion and a new channel model. The exec team loved the ambition. Investors cheered. The CFO did not say no. Without hesitation, she said, “Let us break it down.” Suppose it costs $30 million to build international operations, $12 million to fund channel enablement, plus incremental headcount, marketing expenses, R&D coordination, and overhead. Let us stress test the plan. What if licensing stalls? What if fulfillment issues delay launches? What if cross-border tax burdens permanently drag down the margin?

The CEO wanted the bold headline number. But together, they translated it into executable modules. They set up rolling gates: a $5 million pilot, learn, fund next $10 million, learn, and so on. They built exit clauses. They aligned incentives so teams could pivot without losing credibility. They also built redundancy into systems and analytics, with daily data and optionality-based budgeting. The CEO had the vision, but the CFO gave it a frame. That is partnership.

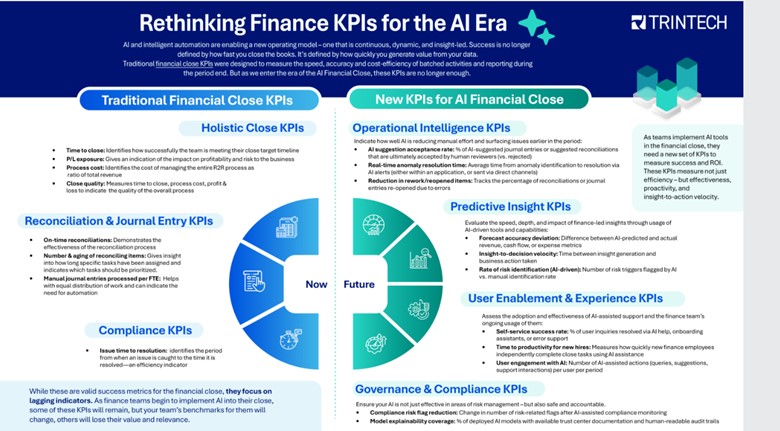

That framing role extends beyond capital structure or P&L. It bleeds into operating rhythm. The strategic CFO becomes the architect of transformation cadence. They design how the weekly, monthly, and quarterly cadences look and feel. They align incentive schemes so that individual geographies can outperform while central teams are still held accountable. They align finance, people, product, and GTM teams to shared performance metrics: not top-level vanity metrics, but actionable ones such as user engagement, cost per new customer, onboarding latency, support burden, and renewal velocity. They ensure data is not stashed in silos. They make it usable, trusted, visible. Because transformation is only as effective as your ability to measure missteps, iterate, and learn.

This is why I say the CFO becomes a strategic weapon: a lever for insight, integration, and investment.

Boards understand this too, especially when it is too late. They see CEOs who talk of digital transformation while still approving global headcount hikes. They see legacy operating systems still dragging down the FY ‘Digital 2.0’ ambition. They see growth funded, but debt rising with little structural benefit. In those moments, they turn to the CFO. The board does not ask the CFO if they can deliver the numbers. They ask whether the CEO can. They ask, “What’s the downside exposure? What are the guardrails? Who is accountable? How long will transformation slow profitability? And can we reverse if needed?”

That board confidence, when positive, is not accidental. It comes from a CFO who built that trust, not by polishing a spreadsheet, but by building strategy together, testing assumptions early, and designing transformation as a financial system.

Indeed, transformation without control is just creative destruction. And while disruption may be trendy, few businesses survive without solid footing. The CFO ensures that disruption does not become destruction. That investments scale with impact. That flexibility is funded. That culture is not ignored. That when exceptions arise, they do not unravel behaviors, but refocus teams.

This is often unseen. Because finance is a support function, not a front-facing one. But consider this: it is finance that approves the first contract. Finance assists in setting the commission structure that defines behavior. Finance sets the credit policy, capital constraints, and invoice timing, and all of these have strategic logic. A CFO who treats each as a tactical lever becomes the heart of transformation.

Take forecasting. Transformation cannot run on backward-looking averages. Yet too many companies rely on year-over-year rates, lagged signals, and static targets. The strategic CFO resurrects forecasting. They bring forward leading indicators: product usage, sales pipeline, supply chain velocity. They reframe forecasts as living systems. We see a dip? We call a pivot meeting. We see high churn? We call the product team. We see hiring cost creep? We call HR. Forewarned is forearmed. That is transformation in flight.

On the capital front, the CFO becomes a barbell strategist. They pair patient growth funding with disciplined structure. They build in fields of optionality: reserves for opportunistic moves, caps on unfunded headcount, staged deployment, and scalable contracts. They calibrate pricing experiments. They design customer acquisition levers with off ramps. They ensure that at every step of change, you can set a gear to reverse—without losing momentum, but with discipline.

And they align people. Transformation hinges on mindset. In fast-moving companies, people often move faster than they think. Great leaders know this. The strategic CFO builds transparency into compensation. They design equity vesting tied to transformation metrics. They design long-term incentives around cross-functional execution. They also design local authority within discipline. Give leaders autonomy, but align them to the rhythm of finance. Even the best strategy dies when every decision is a global approval. Optionality must scale with coordination.

Risk management transforms too. In the past, the CFO’s role in transformation was to shield operations from political turbulence. Today, it is to internally amplify controlled disruption. That means modeling volatility with confidence. Scenario modeling under market shock, regulatory shift, customer segmentation drift. Not just building firewalls, but designing escape ramps and counterweights. A transformation CFO builds risk into transformation—but as a system constraint to be managed, not a gate to prevent ambition.

I once had a CEO tell me they felt alone when delivering digital transformation. HR was not aligned. Product was moving too slowly. Sales was pushing legacy business harder. The CFO built a bridge. They brought HR, legal, sales, and marketing into weekly update sessions, each with agreed metrics. They brokered resolutions. They surfaced trade-offs confidently. They set a floor for accountability: not blame, but clarity. That is partnership. That is transformation armor.

Transformation also triggers cultural tectonics. And every tectonic shift features friction zones—power renegotiation, process realignment, work redesign. Without financial discipline, politics wins. Mistrust builds. Change derails. The strategic CFO intervenes not as a policeman, but as an arbiter of fairness: If people are asked to stretch, show them the ROI. If processes migrate, show them the rationale. If roles shift, unpack the logic. Maintaining trust alignment during transformation is as important as securing funding.

The ability to align culture, capital, cadence, and accountability around a single north star—that is the strategic CFO’s domain.

And there is another hidden benefit: the CFO’s posture sets the tone for transformation maturity. CFOs who co-create, co-own, and co-pivot build transformation muscle. Those companies that learn together scale transformation together.

I once wrote that investors will forgive a miss if the learning loops are obvious. That is also true inside the company. When a CEO and CFO are aligned, when the CFO is the first to acknowledge what is not meeting expectations, and when pivots are driven by data rather than ego, the foundation for resilient leadership is in place. That is how companies rebuild trust in growth every quarter. That is how transformation becomes a norm.

If there is a fear inside the CFO community, it is the fear of being visible. A CFO may believe that financial success is best served quietly. But the moment they step confidently into transformation, they change that dynamic. They say: Yes, we own the books. But we also own the roadmap. Yes, we manage the tail risk. But we also amplify the tail opportunity. That mindset is contagious. It builds confidence across the company and among investors. That shift in posture is more valuable than any forecast.

So let me say it again. Strategy is not a plan. Mechanics do not make execution. Systems do. And at the junction of vision and execution, between boardroom and frontline, stands the CFO. When transformation is on the table, the CFO walks that table from end to end. They make sure the chairs are aligned. The evidence is available. The accountability is shared. The capital is allocated, measured, and adapted.

This is why I refer to the CFO as the CEO’s most important ally. Not simply a confidante. Not just a number-cruncher. A partner in purpose. A designer of execution. A steward of transformation. Which is why, if you are a CFO reading this, I encourage you: step forward. You do not need permission to rethink transformation. You need conviction to shape it. And if you can build clarity around capital, establish a cadence for metrics, align incentives, and implement systems for governance, you will make your CEO’s job easier. You will elevate your entire company. You will unlock optionality not just for tomorrow, but for the years that follow. Because in the end, true transformation is not a moment. It is a movement. And the CFO, when prepared, can lead it.

The Power of Customer Lifetime Value in Modern Business

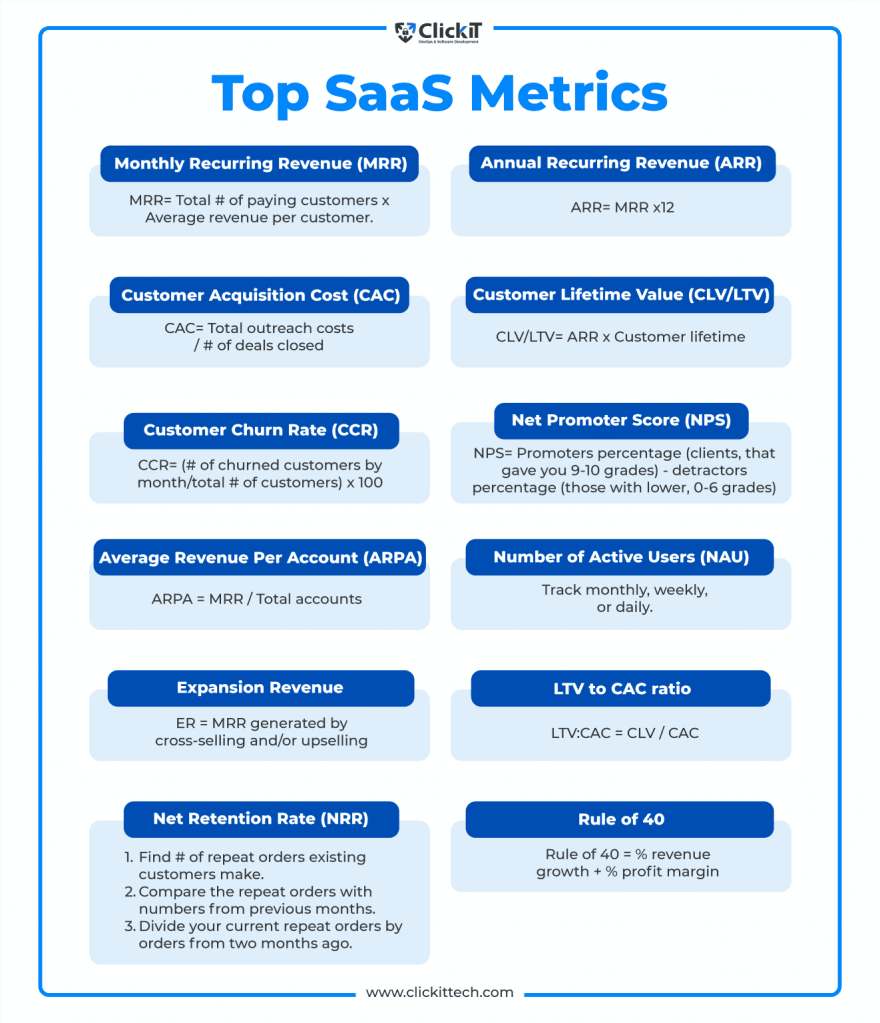

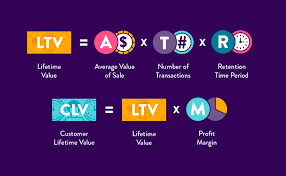

In contemporary business discourse, few metrics carry the strategic weight of Customer Lifetime Value (CLV). CLV and CAC are prime metrics. For modern enterprises navigating an era defined by digital acceleration, subscription economies, and relentless competition, CLV represents a unifying force, uniting finance, marketing, and strategy into a single metric that measures not only transactions but also the value of relationships. Far more than a spreadsheet calculation, CLV crystallizes lifetime revenue, loyalty, referral impact, and long-term financial performance into a quantifiable asset.

This article explores CLV’s origins, its mathematical foundations, its role as a strategic North Star across organizational functions, and the practical systems required to integrate it fully into corporate culture and capital allocation. It also highlights potential pitfalls and ethical implications.

I. CLV as a Cross-Functional Metric

CLV evolved from a simple acknowledgement: not all customers are equally valuable, and many businesses would prosper more by nurturing relationships than chasing clicks. The transition from single-sale tallies to lifetime relationship value gained momentum with the rise of subscription models—telecom plans, SaaS platforms, and membership programs—where the fiscal significance of recurring revenue became unmistakable.

This shift reframed capital deployment and decision-making:

- Marketing no longer seeks volume unquestioningly but targets segments with high long-term value.

- Finance integrates CLV into valuation models and capital allocation frameworks.

- Strategy uses it to guide M&A decisions, pricing stratagems, and product roadmap prioritization.

Because CLV is simultaneously a financial measurement and a customer-centric tool, it builds bridges—translating marketing activation into board-level impact.

II. How to calculate CLV

At its core, CLV employs economic modeling similar to net present value. A basic formula:

CLV = ∑ (t=0 to T) [(Rt – Ct) / (1 + d)^t]

- Rt = revenue generated at time t

- Ct = cost to serve/acquire at time t

- d = discount rate

- T = time horizon

This anchors CLV in well-accepted financial principles: discounted future cash flows, cost allocation, and multi-period forecasting. It satisfies CFO requirements for rigor and measurability.
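As a minimal sketch, the formula above translates directly into a short Python function. The function name `clv` and the sample cash flows are illustrative assumptions; the calculation itself is the discounted sum of (Rt - Ct) from t = 0 to T.

```python
from typing import Sequence


def clv(revenues: Sequence[float], costs: Sequence[float], discount_rate: float) -> float:
    """Discounted customer lifetime value.

    revenues[t] and costs[t] are Rt and Ct for period t = 0..T;
    discount_rate is d, expressed per period (e.g. 0.10 for 10%).
    """
    if len(revenues) != len(costs):
        raise ValueError("revenues and costs must cover the same periods")
    return sum(
        (r - c) / (1 + discount_rate) ** t
        for t, (r, c) in enumerate(zip(revenues, costs))
    )


# Hypothetical customer: acquisition cost in period 0, then two paying years.
value = clv(revenues=[0, 500, 500], costs=[300, 100, 100], discount_rate=0.10)
print(round(value, 2))  # 394.21
```

Because period 0 is not discounted, upfront acquisition cost counts in full, while later margin is worth progressively less. The choice of discount rate and time horizon therefore matters as much as the revenue forecast.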

However, marketing leaders often expand this to capture:

- Referral value (Rt includes not just direct sales, but influenced purchases)

- Emotional or brand-lift dimensions (e.g., window shoppers who convert later)

- Upselling, cross-selling, and tiered monetization over time

These expansions refine CLV into a dynamic forecast rather than a static average—one that responds to segmentation and behavioral triggers.

III. CLV as a Board-Level Metric

A. Investment and Capital Prioritization

Traditional capital decisions rely on ROI, return on invested capital (ROIC), and earnings multiples. CLV adds nuance: it gauges not only immediate returns but extended client relationships. This enables an expanded view of capital returns.

For example, a company might shift budget from low-CLV acquisition channels to retention-focused strategies—investing more in on-boarding, product experience, or customer success. These initiatives, once considered costs, now become yield-generating assets.

B. Segment-Based Acquisition

CLV enables precision targeting. A segment that delivers a 6:1 lifetime value-to-acquisition-cost (LTV:CAC) ratio is clearly more valuable than one delivering 2:1. Marketing reallocates spend accordingly, optimizing strategic segmentation and media mix, tuning messaging for high-value cohorts.
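The comparison can be made concrete with a small sketch that ranks segments by LTV:CAC. The segment names and dollar figures below are invented for illustration; only the 6:1 versus 2:1 contrast comes from the text.

```python
def ltv_cac_ratio(ltv: float, cac: float) -> float:
    """Lifetime value divided by customer acquisition cost."""
    if cac <= 0:
        raise ValueError("CAC must be positive")
    return ltv / cac


def rank_segments(segments: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """Return (segment, LTV:CAC) pairs, highest ratio first.

    `segments` maps a segment name to its (ltv, cac) pair.
    """
    ratios = {name: ltv_cac_ratio(ltv, cac) for name, (ltv, cac) in segments.items()}
    return sorted(ratios.items(), key=lambda item: item[1], reverse=True)


# Hypothetical segments matching the 6:1 and 2:1 ratios in the text.
segments = {
    "smb": (4_000.0, 2_000.0),
    "enterprise": (60_000.0, 10_000.0),
}
print(rank_segments(segments))  # [('enterprise', 6.0), ('smb', 2.0)]
```

A ranking like this is a starting point for reallocating spend, not a complete answer, since the ratio says nothing about how large each segment is or how quickly CAC is recovered.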

Because CLV is quantifiable and forward-looking, it naturally aligns marketing decisions with shareholder-driven metrics.

C. Tiered Pricing and Customer Monetization

CLV is also central to monetization strategy. Churn, upgrade rates, renewal behaviors, and pricing power all can be evaluated through the lens of customer value over time. Versioning, premium tiers, loyalty benefits—all become levers to maximize lifetime value. Finance and strategy teams model these scenarios to identify combinations that yield optimal returns.

D. Strategic Partnerships and M&A

CLV informs deeper decisions about partnerships and mergers. In evaluating a potential platform acquisition, projected contribution to overall CLV may be a decisive factor, especially when combined customer pools or cross-sell ecosystems can amplify lifetime revenue. It embeds customer value insights into due diligence and valuation calculations.

IV. Organizational Integration: A Strategic Imperative

Effective CLV deployment requires more than good analytics—it demands structural clarity and cultural alignment across three key functions.

A. Finance as Architect

Finance teams frame the assumptions—discount rates, cost allocation, margin calibration—and embed CLV into broader financial planning and analysis. Their task: convert behavioral data and modeling into company-wide decision frameworks used in investment reviews, budgeting, and forecasting processes.

B. Marketing as Activation Engine

Marketing owns customer acquisition, retention campaigns, referral programs, and product messaging. Their role is to feed the CLV model with real data: conversion rates, churn, promotion impact, and engagement flows. In doing so, campaigns become precision tools tuned to maximize customer yield rather than volume alone.

C. Strategy as Systems Designer

The strategy team weaves CLV outputs into product roadmaps, pricing strategy, partnership design, and geographic expansion. Using CLV segmented by cohort and channel, strategy leaders can sequence investments to align with long-term margin objectives, such as a five-year CLV-driven revenue mix.

V. Embedding CLV Into Corporate Processes

The following five practices have proven effective at embedding CLV into organizational DNA:

- Executive Dashboards: Incorporate LTV:CAC ratios, cohort retention rates, and segment CLV curves into executive reporting cycles. Tie leadership incentives (e.g., bonuses, compensation targets) to long-term value outcomes.

- Cross-Functional CLV Cells: Establish CLV analytics teams staffed by finance, marketing insights, and data engineers. They own CLV modeling, simulation, and distribution across functions.

- Monthly CLV Reviews: Monthly orchestration meetings integrate metrics updates, marketing feedback on campaigns, pricing evolution, and retention efforts. Simultaneous adjustment across functions allows dynamic resource allocation.

- Capital Allocation Gateways: Projects involving customer-facing decisions, from new products to geographic pullbacks, must include CLV impact assessments in gating criteria. These can also feed into product investment requests and ROI thresholds.

- Continuous Learning Loops: CLV models must be updated with actual lifecycle data. Regular recalibration fosters learning from retention behaviors, pricing experiments, churn drivers, and renewal rates, fueling confidence in incremental decision-making.

VI. Caveats and Limitations

CLV, though powerful, is not a cure-all. These caveats merit attention:

- Data Quality: Poorly integrated systems, missing customer identifiers, or inconsistent cohort logic can produce misleading CLV metrics.

- Assumption Risk: Discount rates, churn decay, turnaround behavior—all are model assumptions. Unqualified confidence can mislead investment.

- Narrow Focus: High CLV may chronically favor established segments, leaving growth through new markets or products underserved.

- Over-Targeting Risk: Over-optimizing for short-term yield may harm brand reputation or equity with broader audiences.

Therefore, CLV must be treated with humility—an advanced tool requiring discipline in measurement, calibration, and multi-dimensional insight.

VII. The Influence of Digital Ecosystems

Modern digital ecosystems deliver immense granularity. Every interaction—click, open, referral, session length—is measurable. These dark data provide context for CLV testing, segment behavior, and risk triggers.

However, this scale introduces overfitting risk: spurious correlations may override structural signals. Successful organizations maintain a balance—leveraging high-frequency signals for short-cycle interventions, while retaining medium-term cohort logic for capital allocation and strategic initiatives.

VIII. Ethical and Brand Implications

CLV, when viewed through a values lens, also becomes a cultural and ethical marker. Decisions informed by CLV raise questions:

- To what extent should a business monetize a cohort? Is excessive monetization ethical?

- When loyalty programs disproportionately reward high-value customers, does brand equity suffer among moderate spenders?

- When referral bonuses attract opportunists rather than advocates, is brand authenticity compromised?

These considerations demand that CLV strategies incorporate brand and ethical governance, not just financial optimization.

IX. Cross-Functionally Harmonized Governance

A robust operating model to sustain CLV alignment should include:

- Structured Metrics Governance: Common cohort definitions, discount rates, margin allocation, and data timelines maintained under joint sponsorship.

- Integrated Information Architecture: Real-time reporting, defined data lineage (acquisition to LTV), and cross-functional access.

- Quarterly Board Oversight: Board-level dashboards that track digital customer performance and CLV trends as fundamental risk and opportunity signals.

- Ethical Oversight Layer: Cross-functional reviews ensuring CLV-driven decisions don’t undermine customer trust or brand perception.

X. CLV as Strategic Doctrine

When deployed with discipline, CLV becomes more than a metric—it becomes a cultural doctrine. The essential tenets are:

- Time horizon focus: orienting decisions toward lifetime impact rather than short-cycle transactions.

- Cross-functional governance: embedding CLV into finance, marketing, and strategy with shared accountability.

- Continuous recalibration: creating feedback loops that update assumptions and reinforce trust in the metric.

- Ethical stewardship: ensuring customer relationships are respected, brand equity maintained, and monetization balanced.

With that foundation, CLV can guide everything from media budgets and pricing plans to acquisition strategy and market expansion.

Conclusion

In an age where customer relationships define both resilience and revenue, Customer Lifetime Value stands out as an indispensable compass. It unites finance’s need for systematic rigor, marketing’s drive for relevance and engagement, and strategy’s mandate for long-term value creation. When properly modeled, governed, and applied ethically, CLV enables teams to shift from transactional quarterly mindsets to lifetime portfolios, transforming customers into true franchise assets.

For any organization aspiring to mature its performance, CLV is the next frontier. Not just a metric on a dashboard—but a strategic mechanism capable of aligning functions, informing capital allocation, shaping product trajectories, elevating brand meaning, and forging relationships that transcend a single transaction.

Navigating Startup Growth: Adapting Your Operating Model Every Year

If a startup’s journey can be likened to an expedition up Everest, then its operating model is the climbing gear—vital, adaptable, and often revised. In the early stages, founders rely on grit and flexibility. But as companies ascend and attempt to scale, they face a stark and simple truth: yesterday’s systems are rarely fit for tomorrow’s challenges. The premise of this memo is equally stark: your operating model must evolve—consciously and structurally—every 12 months if your company is to scale, thrive, and remain relevant.

This is not a speculative opinion. It is a necessity borne out by economic theory, pattern recognition, operational reality, and the statistical arc of business mortality. According to a 2023 McKinsey report, only 1 in 200 startups make it to $100M in revenue, and even fewer become sustainably profitable. The cliff isn’t due to product failure alone—it’s largely an operational failure to adapt at the right moment. Let’s explore why.

1. The Law of Exponential Complexity

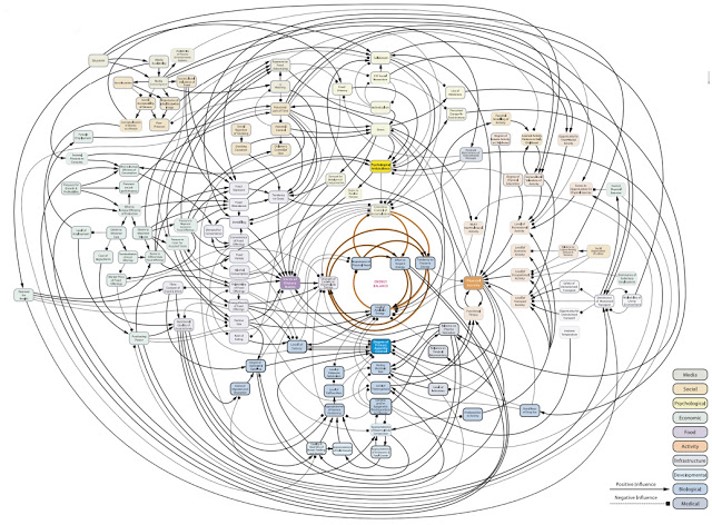

Startups begin with a high signal-to-noise ratio. A few people, one product, and a common purpose. Communication is fluid, decision-making is swift, and adjustments are frequent. But as the team grows from 10 to 50 to 200, each node adds complexity. If you consider the formula for potential communication paths in a group—n(n-1)/2—you’ll find that at 10 employees, there are 45 unique interactions. At 50? That number explodes to 1,225.
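The arithmetic above is easy to check. Here is a minimal sketch; the function name `communication_paths` and the extra 200-person data point are mine, added only for illustration.

```python
def communication_paths(n: int) -> int:
    """Number of unique pairwise links among n people: n(n-1)/2."""
    if n < 0:
        raise ValueError("headcount must be non-negative")
    return n * (n - 1) // 2


for headcount in (10, 50, 200):
    print(headcount, communication_paths(headcount))
# prints:
# 10 45
# 50 1225
# 200 19900
```

Headcount grows 20x from 10 to 200, while the number of potential paths grows more than 400x.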

This isn’t just theory. Each of those paths represents a potential decision delay, misalignment, or redundancy. Without an intentional redesign of how information flows, how priorities are set, and how accountability is structured, the weight of complexity crushes velocity. An operating model that worked flawlessly in Year 1 becomes a liability in Year 3.

Lesson: The operating model must evolve to actively simplify while the organization expands.

2. The 4 Seasons of Growth

Companies grow in phases, each requiring different operating assumptions. Think of them as seasons:

| Stage | Key Focus | Operating Model Needs |
|---|---|---|
| Start-up | Product-Market Fit | Agile, informal, founder-centric |
| Early Growth | Customer Traction | Lean teams, tight loops, scalable GTM |
| Scale-up | Repeatability | Functional specialization, metrics |
| Expansion | Market Leadership | Cross-functional governance, systems |

At each transition, the company must answer: What must we centralize vs. decentralize? What metrics now matter? Who owns what? A model that optimizes for speed in Year 1 may require guardrails in Year 2. And in Year 3, you may need hierarchy—yes, that dreaded word among startups—to maintain coherence.

Attempting to scale without rethinking the model is akin to flying a Cessna into a hurricane. Many try. Most crash.

3. From Hustle to System: Institutionalizing What Works

Founders often resist operating models because they evoke bureaucracy. But bureaucracy isn’t the issue—entropy is. As the organization grows, systems prevent chaos. A well-crafted operating model does three things:

- Defines governance – who decides what, when, and how.

- Aligns incentives – linking strategy, execution, and rewards.

- Enables measurement – providing real-time feedback on what matters.

Let’s take a practical example. In the early days, a product manager might report directly to the CEO and also collaborate closely with sales. But once you have multiple product lines and a sales org with regional P&Ls, that old model breaks. Now you need Product Ops. You need roadmap arbitration based on capacity planning, not charisma.

Translation: Institutionalize what worked ad hoc by architecting it into systems.

4. Why Every 12 Months? The Velocity Argument

Why not every 24 months? Or every 6? The 12-month cadence is grounded in several interlocking reasons:

- Business cycles: Most companies operate on annual planning rhythms. You set targets, budget resources, and align compensation yearly. The operating model must match that cadence or risk misalignment.

- Cultural absorption: People need time to digest one operating shift before another is introduced. Twelve months is the Goldilocks zone—enough to evaluate results but not too long to become obsolete.

- Market feedback: Every year brings fresh feedback from the market, investors, customers, and competitors. If your operating model doesn’t evolve in step, you’ll lose your edge—like a boxer refusing to switch stances mid-fight.

And then there’s compounding. Like interest on capital, small changes in systems, when made annually, compound dramatically. Optimize decision velocity by 10% annually, and in 5 years you’ve improved it by roughly 60%; keep going and you’ve doubled it in a little over 7. Delay, and you’re crushed by organizational debt.
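A quick check of the compounding arithmetic, as a sketch: a 10% annual improvement compounds to about 1.6x over five years and doubles in a little over seven. The function names are hypothetical.

```python
import math


def compounded_gain(rate: float, years: int) -> float:
    """Cumulative multiplier after compounding `rate` once per year."""
    return (1 + rate) ** years


def years_to_double(rate: float) -> float:
    """Years of annual compounding at `rate` needed to reach 2x."""
    return math.log(2) / math.log(1 + rate)


print(round(compounded_gain(0.10, 5), 2))  # prints: 1.61
print(round(years_to_double(0.10), 1))     # prints: 7.3
```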

5. The Operating Model Canvas

To guide this evolution, we recommend using a simplified Operating Model Canvas—a strategic tool that captures the six dimensions that must evolve together:

| Dimension | Key Questions |
|---|---|
| Structure | How are teams organized? What’s centralized? |
| Governance | Who decides what? What’s the escalation path? |
| Process | What are the key workflows? How do they scale? |
| People | Do roles align to strategy? How do we manage talent? |
| Technology | What systems support this stage? Where are the gaps? |
| Metrics | Are we measuring what matters now vs. before? |

Reviewing and recalibrating these dimensions annually ensures that the foundation evolves with the building. The alternative is often misalignment, where strategy runs ahead of execution—or worse, vice versa.

6. Case Studies in Motion: Lessons from the Trenches

a. Slack (Pre-acquisition)

In Year 1, Slack’s operating model emphasized velocity of product feedback. Engineers spoke to users directly, releases shipped weekly, and product decisions were founder-led. But by Year 3, with enterprise adoption rising, the model shifted: compliance, enterprise account teams, and customer success became core to the GTM motion. Without adjusting the operating model to support longer sales cycles and regulated customer needs, Slack could not have grown to a $1B+ revenue engine.

b. Airbnb

Initially, Airbnb’s operating rhythm centered on peer-to-peer UX. But as global regulatory scrutiny mounted, they created entirely new policy, legal, and trust & safety functions—none of which were needed in Year 1. Each year, Airbnb re-evaluated what capabilities were now “core” vs. “context.” That discipline allowed them to survive major downturns (like COVID) and rebound.

c. Stripe

Stripe invested heavily in internal tooling as they scaled. Recognizing that developer experience was not only for customers but also internal teams, they revised their internal operating platforms annually—often before they were broken. The result: a company that scaled to serve millions of businesses without succumbing to the chaos that often plagues hypergrowth.

7. The Cost of Inertia

Aging operating models extract a hidden tax. They confuse new hires, slow decisions, demoralize high performers, and inflate costs. Worse, they signal stagnation. In a landscape where capital efficiency is paramount (as underscored in post-2022 venture dynamics), bloated operating models are a death knell.

Consider this: According to Bessemer Venture Partners, top quartile SaaS companies show Rule of 40 compliance with fewer than 300 employees per $100M of ARR. Those that don’t? Often have twice the headcount with half the profitability—trapped in models that no longer fit their stage.
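To make those two yardsticks concrete, here is a minimal sketch. It assumes the common formulation of the Rule of 40 (revenue growth rate plus profit margin, both in percent, summing to at least 40) and treats the employees-per-$100M figure as a simple ratio. Which margin to use (EBITDA, free cash flow) varies by practitioner; the function names and sample figures are hypothetical.

```python
def rule_of_40_score(growth_pct: float, margin_pct: float) -> float:
    """Growth rate plus profit margin, both expressed in percent."""
    return growth_pct + margin_pct


def employees_per_100m_arr(headcount: int, arr_usd: float) -> float:
    """Headcount normalized to each $100M of annual recurring revenue."""
    return headcount / (arr_usd / 100_000_000)


# Hypothetical company: 30% growth, 12% margin, 420 people, $150M ARR.
score = rule_of_40_score(growth_pct=30.0, margin_pct=12.0)
density = employees_per_100m_arr(headcount=420, arr_usd=150_000_000)
print(score >= 40, density < 300)  # prints: True True
```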

8. How to Operationalize the 12-Month Reset

For practical implementation, I suggest a 12-month Operating Model Review Cycle:

| Month | Focus Area |
|---|---|
| Jan | Strategic planning finalization |
| Feb | Gap analysis of current model |
| Mar | Cross-functional feedback loop |
| Apr | Draft new operating model vNext |
| May | Review with Exec Team |
| Jun | Pilot model changes |
| Jul | Refine and communicate broadly |
| Aug | Train managers on new structures |
| Sep | Integrate into budget planning |
| Oct | Lock model into FY plan |
| Nov | Run simulations/test governance |
| Dec | Prepare for January launch |

This cycle ensures that your org model does not lag behind your strategic ambition. It also sends a powerful cultural signal: we evolve intentionally, not reactively.

Conclusion: Be the Architect, Not the Archaeologist

Every successful company is, at some level, a systems company. Apple is as much about its supply chain as its design. Amazon is a masterclass in operating cadence. And Salesforce didn’t win by having a better CRM—it won by continuously evolving its go-to-market and operating structure.

To scale, you must be the architect of your company’s operating future—not an archaeologist digging up decisions made when the world was simpler.

So I leave you with this conviction: operating models are not carved in stone—they are coded in cycles. And the companies that win are those that rewrite that code every 12 months—with courage, with clarity, and with conviction.

Precision at Scale: How to Grow Without Drowning in Complexity

In business, as in life, scale is seductive. It promises more of the good things—revenue, reach, relevance. But it also invites something less welcome: complexity. And the thing about complexity is that it doesn’t ask for permission before showing up. It simply arrives, unannounced, and tends to stay longer than you’d like.

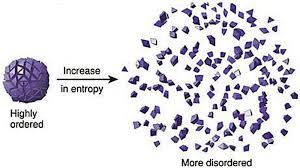

As we pursue scale, whether by growing teams, expanding into new markets, or launching adjacent product lines, we must ask a question that seems deceptively simple: how do we know we’re scaling the right way? That question is not just philosophical—it’s deeply economic. The right kind of scale brings leverage. The wrong kind brings entropy.

Now, if I’ve learned anything from years of allocating capital, it is this: returns come not just from growth, but from managing the cost and coordination required to sustain that growth. In fact, the most successful enterprises I’ve seen are not the ones that scaled fastest. They’re the ones that scaled precisely. So, let’s get into how one can scale thoughtfully, without overinvesting in capacity, and how to tell when the system you’ve built is either flourishing or faltering.

To begin, one must understand that scale and complexity do not rise in parallel; complexity has a nasty habit of accelerating. A company with two teams might have a handful of communication lines. Add a third team, and you don’t just add more conversations—you add relationships between every new and existing piece. In engineering terms, it’s a combinatorial explosion. In business terms, it’s meetings, misalignment, and missed expectations.

Cities provide a useful analogy. When they grow in population, certain efficiencies appear. Infrastructure per person often decreases, creating cost advantages. But cities also face nonlinear rises in crime, traffic, and disease—all manifestations of unmanaged complexity. The same is true in organizations. The system pays a tax for every additional node, whether that’s a service, a process, or a person. That tax is complexity, and it compounds.

Knowing this, we must invest in capacity like we would invest in capital markets—with restraint and foresight. Most failures in capacity planning stem from either a lack of preparation or an excess of confidence. The goal is to invest not when systems are already breaking, but just before the cracks form. And crucially, to invest no more than necessary to avoid those cracks.

Now, how do we avoid overshooting? I’ve found that the best approach is to treat capacity like runway. You want enough of it to support takeoff, but not so much that you’ve spent your fuel on unused pavement. We achieve this by investing in increments, triggered by observable thresholds. These thresholds should be quantitative and predictive—not merely anecdotal. If your servers are running at 85 percent utilization across sustained peak windows, that might justify additional infrastructure. If your engineering lead time starts rising despite team growth, it suggests friction has entered the system. Either way, what you’re watching for is not growth alone, but whether the system continues to behave elegantly under that growth.
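The two triggers described above can be sketched as simple checks. This is an illustration under assumptions of my own: "sustained" is taken to mean the last three peak windows, and friction is read as lead time rising while headcount also rises. The function names and sample readings are hypothetical.

```python
from typing import Sequence


def sustained_breach(peak_utilization: Sequence[float],
                     threshold: float = 0.85,
                     min_windows: int = 3) -> bool:
    """True if the most recent `min_windows` peak readings all meet the threshold."""
    if len(peak_utilization) < min_windows:
        return False
    return all(u >= threshold for u in peak_utilization[-min_windows:])


def friction_signal(lead_times_days: Sequence[float],
                    team_sizes: Sequence[int]) -> bool:
    """True if lead time rose over the period even though the team grew."""
    return (lead_times_days[-1] > lead_times_days[0]
            and team_sizes[-1] > team_sizes[0])


print(sustained_breach([0.70, 0.86, 0.88, 0.91]))      # prints: True
print(sustained_breach([0.90, 0.60, 0.88, 0.91]))      # prints: False
print(friction_signal([5.0, 6.5, 8.0], [12, 15, 20]))  # prints: True
```

A single spike does not trigger investment here; only a run of breaches does, which keeps the signal quantitative rather than anecdotal.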

Elegance matters. Systems that age well are modular, not monolithic. In software, this might mean microservices that scale independently. In operations, it might mean regional pods that carry their own load, instead of relying on a centralized command. Modular systems permit what I call “selective scaling”—adding capacity where needed, without inflating everything else. It’s like building a house where you can add another bedroom without having to reinforce the foundation. That kind of flexibility is worth gold.

Of course, any good decision needs a reliable forecast behind it. But forecasting is not about nailing the future to a decimal point. It is about bounding uncertainty. When evaluating whether to scale, I prefer forecasts that offer a range—base, best, and worst-case scenarios—and then tie investment decisions to the 75th percentile of demand. This ensures you’re covering plausible upside without betting on the moon.
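One way to turn a three-point forecast into a 75th-percentile target is to simulate demand between the worst and best cases. The sketch below assumes a triangular distribution peaked at the base case, which is my simplification and not the only reasonable choice; the demand figures and function name are hypothetical.

```python
import random
import statistics


def demand_p75(worst: float, base: float, best: float,
               draws: int = 10_000, seed: int = 42) -> float:
    """75th percentile of simulated demand under a triangular distribution."""
    rng = random.Random(seed)
    samples = [rng.triangular(worst, best, base) for _ in range(draws)]
    # quantiles(n=4) returns the three quartile cut points; index 2 is the 75th.
    return statistics.quantiles(samples, n=4)[2]


# Hypothetical monthly demand in units: worst 800, base 1,000, best 1,500.
target = demand_p75(worst=800, base=1_000, best=1_500)
print(round(target))
```

With these inputs the target lands above the base case but well short of the best case, which is the point: plausible upside is covered without sizing for the moon.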

Let’s not forget, however, that systems are only as good as the signals they emit. I’m wary of organizations that rely solely on lagging indicators like revenue or margin. These are important, but they are often the last to move. Leading indicators—cycle time, error rates, customer friction, engineer throughput—tell you much sooner whether your system is straining. In fact, I would argue that latency, broadly defined, is one of the clearest signs of stress. Latency in delivery. Latency in decisions. Latency in feedback. These are the early whispers before systems start to crack.

To measure whether we’re making good decisions, we need to ask not just if outcomes are improving, but if the effort to achieve them is becoming more predictable. Systems with high variability are harder to scale because they demand constant oversight. That’s a recipe for executive burnout and organizational drift. On the other hand, systems that produce consistent results with declining variance signal that the business is not just growing—it’s maturing.
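Declining variance can be tracked with something as simple as the coefficient of variation (standard deviation divided by mean) per period. A minimal sketch follows; the metric choice, function names, and cycle-time figures are assumptions for illustration.

```python
import statistics
from typing import Sequence


def coefficient_of_variation(values: Sequence[float]) -> float:
    """Population standard deviation relative to the mean."""
    return statistics.pstdev(values) / statistics.fmean(values)


def is_maturing(periods: Sequence[Sequence[float]]) -> bool:
    """True if relative variability falls in every successive period."""
    cvs = [coefficient_of_variation(p) for p in periods]
    return all(later < earlier for earlier, later in zip(cvs, cvs[1:]))


# Hypothetical delivery cycle times (days) sampled in three quarters.
cycle_times_by_quarter = [
    [4, 9, 15, 6, 12],
    [6, 9, 12, 7, 10],
    [8, 9, 10, 8, 9],
]
print(is_maturing(cycle_times_by_quarter))  # prints: True
```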

Still, even the best forecasts and the finest metrics won’t help if you lack the discipline to say no. I’ve often told my teams that the most underrated skill in growth is the ability to stop. Stopping doesn’t mean failure; it means the wisdom to avoid doubling down when the signals aren’t there. This is where board oversight matters. Just as we wouldn’t pour more capital into an underperforming asset without a turn-around plan, we shouldn’t scale systems that aren’t showing clear returns.

So when do we stop? There are a few flags I look for. The first is what I call capacity waste—resources allocated but underused, like a datacenter running at 20 percent utilization, or a support team waiting for tickets that never come. That’s not readiness. That’s idle cost. The second flag is declining quality. If error rates, customer complaints, or rework spike following a scale-up, then your complexity is outpacing your coordination. Third, I pay attention to cognitive load. When decision-making becomes a game of email chains and meeting marathons, it’s time to question whether you’ve created a machine that’s too complicated to steer.

There’s also the budget creep test. If your capacity spending increases by more than 10 percent quarter over quarter without corresponding growth in throughput, you’re not scaling—you’re inflating. And in inflation, as in business, value gets diluted.
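The budget creep test reduces to a two-line comparison. In this sketch I read "without corresponding growth in throughput" as throughput growing more slowly than spend; that interpretation, the function name, and the sample figures are assumptions.

```python
def budget_creep(spend_prev: float, spend_curr: float,
                 throughput_prev: float, throughput_curr: float,
                 limit: float = 0.10) -> bool:
    """True if capacity spend grew past `limit` QoQ and outpaced throughput."""
    spend_growth = spend_curr / spend_prev - 1
    throughput_growth = throughput_curr / throughput_prev - 1
    return spend_growth > limit and throughput_growth < spend_growth


# Spend up 15%, throughput up 2%: inflating, not scaling.
print(budget_creep(100, 115, 1_000, 1_020))  # prints: True
# Spend up 15%, throughput up 20%: spending is earning its keep.
print(budget_creep(100, 115, 1_000, 1_200))  # prints: False
```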

One way to guard against this is by treating architectural reserves like financial ones. You wouldn’t deploy your full cash reserve just because an opportunity looks interesting. You’d wait for evidence. Similarly, system buffers should be sized relative to forecast volatility, not organizational ambition. A modest buffer is prudent. An oversized one is expensive insurance.

Some companies fall into the trap of building for the market they hope to serve, not the one they actually have. They build as if the future were guaranteed. But the future rarely offers such certainty. A better strategy is to let the market pull capacity from you. When customers stretch your systems, then you invest. Not because it’s a bet, but because it’s a reaction to real demand.

There’s a final point worth making here. Scaling decisions are not one-time events. They are sequences of bets, each informed by updated evidence. You must remain agile enough to revise the plan. Quarterly evaluations, architectural reviews, and scenario testing are the boardroom equivalent of course correction. Just as pilots adjust mid-flight, companies must recalibrate as assumptions evolve.

To bring this down to earth, let me share a brief story. A fintech platform I advised once found itself growing at 80 percent quarter over quarter. Flush with success, they expanded their server infrastructure by 200 percent in a single quarter. For a while, it worked. But then something odd happened. Performance didn’t improve. Latency rose. Error rates jumped. Why? Because they hadn’t scaled the right parts. The orchestration layer, not the compute layer, was the bottleneck. Their added capacity actually increased system complexity without solving the real issue. It took a re-architecture, and six months of disciplined rework, to get things back on track. The lesson: scaling the wrong node is worse than not scaling at all.

In conclusion, scale is not the enemy. But ungoverned scale is. The real challenge is not growth, but precision. Knowing when to add, where to reinforce, and—perhaps most crucially—when to stop. If we build systems with care, monitor them with discipline, and remain intellectually honest about what’s working, we give ourselves the best chance to grow not just bigger, but better.

And that, to borrow a phrase from capital markets, is how you compound wisely.

Bias and Error: Human and Organizational Tradeoff

“I spent a lifetime trying to avoid my own mental biases. A.) I rub my own nose into my own mistakes. B.) I try and keep it simple and fundamental as much as I can. And, I like the engineering concept of a margin of safety. I’m a very blocking and tackling kind of thinker. I just try to avoid being stupid. I have a way of handling a lot of problems — I put them in what I call my ‘too hard pile,’ and just leave them there. I’m not trying to succeed in my ‘too hard pile.’” : Charlie Munger — 2020 CalTech Distinguished Alumni Award interview

Bias is a disproportionate weight in favor of or against an idea or thing, usually in a way that is closed-minded, prejudicial, or unfair. Biases can be innate or learned. People may develop biases for or against an individual, a group, or a belief. In science and engineering, a bias is a systematic error. Statistical bias results from an unfair sampling of a population, or from an estimation process that does not give accurate results on average.

Error refers to an outcome that differs from reality within the context of the objective function being pursued.

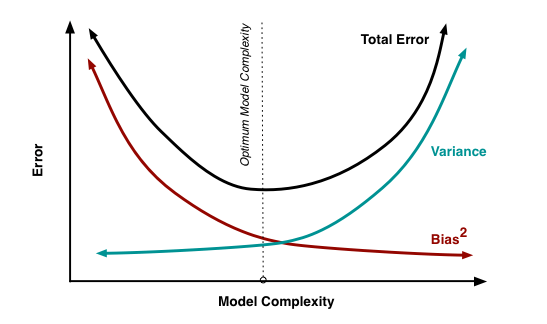

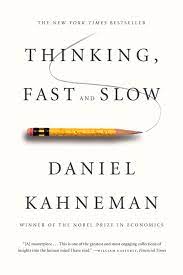

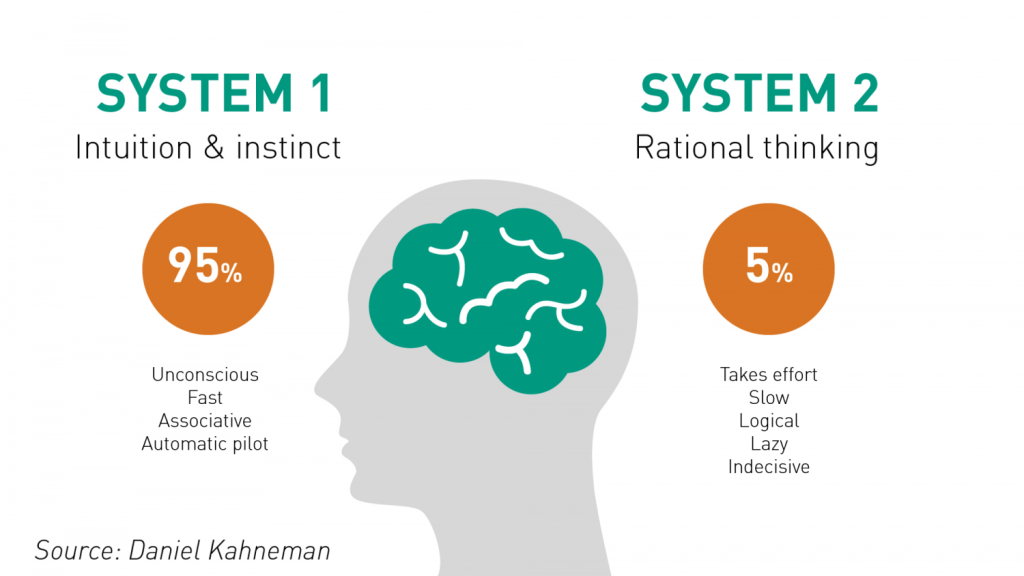

Thus, I would like to think of Bias as a process that might lead to an Error. However, that is not always the case. There are instances where a bias might get you to an accurate, or nearly accurate, result. Is having a biased framework always a bad thing? Not necessarily. From an evolutionary standpoint, humans have progressed by making rapid judgements, many of them stemming from experience and exposure to elements in society. Rapid judgements are typified by System 1 judgement (Kahneman, Tversky), which allows bias and heuristics to commingle and arrive efficiently at intuitive decision outcomes.

And again, the decision framework constitutes a continually active process in how humans and/or organizations execute upon their goals. It is largely an emotional response but could just as well be an automated response to a certain stimulus. However, there is a danger prevalent in System 1 thinking: it might lead one to head comfortably toward an outcome that is seemingly intuitive, while the actual result turns out significantly different, and that leads to an error in judgement. In philosophy, you often hear of the problem of induction, which holds that your understanding of a future outcome relies on the continuity of past outcomes; that is an errant way of thinking, although it still represents a useful tool for us to advance toward solutions.

System 2 judgement emerges as another means to temper the more significant variabilities associated with System 1 thinking. System 2 thinking represents a more deliberate approach, which leads to a more careful construction of rationale and thought. It is a system that slows down decision making, since it explores the logic, the assumptions, and how tightly the framework fits together across test contexts. There is a lot more at work here: the person or the organization has to invest the time, focus the effort, and concentrate on the problem that has to be wrestled with. This is also the process where you search for biases that might be at play and minimize or remove them altogether. Thus, the two Systems represent two different patterns of thinking: rapid, more variable, and more error-prone outcomes vs. slow, stable, and less error-prone outcomes.

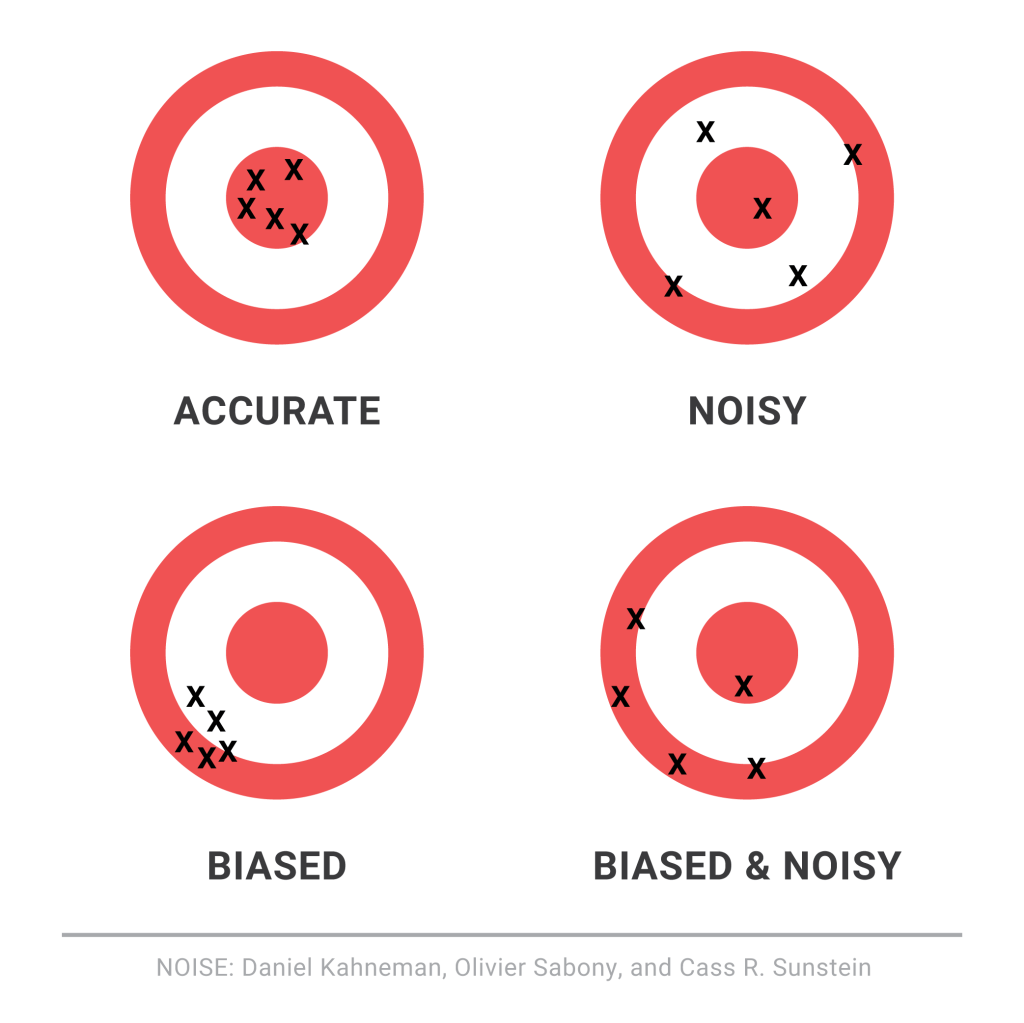

So let us revisit the Bias vs. Variance tradeoff. The idea is that the more bias you bring to a problem, the less variance there is in the aggregate. That does not mean that you are accurate. It only means that there is less variance in the set of outcomes, even if all of the outcomes are materially wrong. Bias limits the variance because it enforces a constraint on the hypothesis space, leading to a smaller and more closely knit set of probabilistic outcomes. If you were to remove the constraints on the hypothesis space (namely, remove bias from the decision framework), you would face a significant number of possibilities and a larger spread of outcomes. With that said, the expected value of those outcomes might actually be closer to reality, despite the variance, than the result of a framework built on heuristics or operating in a biased mode.
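A small simulation illustrates the tradeoff. It is a sketch under assumptions of my own: the "truth" is a mean of 10, each decision maker sees only five noisy observations, and the biased estimator pulls every estimate halfway toward a prior guess of 4. All names and numbers are hypothetical.

```python
import random
import statistics


def simulate(estimator, true_mean=10.0, sd=5.0, sample_size=5,
             trials=2_000, seed=0):
    """Return (bias, variance) of an estimator over repeated small samples."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sd) for _ in range(sample_size)]
        estimates.append(estimator(sample))
    bias = statistics.fmean(estimates) - true_mean
    variance = statistics.pvariance(estimates)
    return bias, variance


def unbiased(sample):
    """Plain sample mean: no prior constraint, wider spread."""
    return statistics.fmean(sample)


def anchored(sample, prior=4.0, weight=0.5):
    """Sample mean pulled toward a fixed prior: tighter spread, off target."""
    return weight * prior + (1 - weight) * statistics.fmean(sample)


for name, estimator in (("unbiased", unbiased), ("anchored", anchored)):
    bias, variance = simulate(estimator)
    print(f"{name}: bias={bias:.2f} variance={variance:.2f}")
```

The anchored estimates cluster tightly, but around the wrong value; the unbiased estimates scatter more, yet their average sits close to the truth.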

So how do we decide then? Jeff Bezos mentioned something that I recall: some decisions are one-way streets and some are two-way. In other words, there are some decisions that cannot be undone, for good or for bad. It is a wise man who is able to anticipate that early on and decide which system to pursue. An organization makes a few big and important decisions, and a lot of small decisions. Identify the big ones, spend oodles of time on them, and encourage a diverse set of inputs to work through those decisions at a sufficiently high level of detail. When I personally craft rolling operating models, they serve a strategic purpose that might sit on shifting sands. That is perfectly okay! But it is critical to evaluate those big decisions, since the effectiveness of the strategy and its concomitant quantitative representation rests upon them. Cutting corners can lead to disaster or an unforgiving result!

I will focus on the big whale decisions now. I will assume, for the sake of expediency, that the series of small decisions, individually or in the aggregate, will not be large enough to take us over the precipice. (It is also important, however, to examine the possibility that a series of small decisions can lead to an unintended emergent outcome that might have a whale effect: we come across that in complexity theory, which I have already touched on in a set of previous articles.)

Cognitive biases are the biggest culprits that one needs to worry about. Some of the more common ones are confirmation bias, attribution bias, the halo effect, anchoring, framing, and status quo bias. There are other cognitive biases at play, but the ones listed above are common in planning and execution. It is imperative that these biases be forcibly peeled off while formulating a strategy toward problem solving.

But then there are also the statistical biases that one needs to be wary of. How we select data, or selection bias, plays a big role in validating information. In fact, if there are underlying statistical biases, the validity of the information is questionable. Then there are other strains of statistical bias, such as forecast bias: the natural tendency to be overly optimistic or pessimistic without any substantive evidence to support one case or the other. Sometimes how the information is presented, visually or in tabular format, can lead to errors of omission and commission, leading the organization and its judgement down paths that are unwarranted and just plain wrong. Thus, it is important to be aware of how statistical biases come into play to sabotage your decision framework.

One of the finest illustrations of misjudgment has been laid out by Charlie Munger. Here is the link to the excerpt: https://fs.blog/great-talks/psychology-human-misjudgment/ He lays out a comprehensive list of 25 biases that ail decision making. Once again, stripping away biases does not necessarily result in accuracy; it increases the variability of outcomes, which might be clustered around a mean that is closer to accuracy than otherwise.

Variability is Noise. We do not know a priori what the expected mean is. We are close, but not quite. There is noise, or a whole set of outcomes, around the mean. Viewing things closer to the ground versus higher up would still create a likelihood of accepting a false hypothesis or rejecting a true one. Noise is extremely hard to sift through, but how you sift through it to arrive at the signals that are determining factors is critical to organizational success. To get to this territory, we have eliminated the cognitive and statistical biases. Now comes the search for the signal. What do we do then? An increase in noise impairs accuracy. To improve accuracy, you either reduce noise or figure out those indicators that signal an accurate measure.

This is where algorithmic thinking comes into play. You start by establishing well-tested algorithms in specific use cases and cross-validating them across a large set of experiments or scenarios. It has been shown that algorithmic tools are, in the aggregate, superior to human judgement, since they can systematically surface causal and correlative relationships. Furthermore, special tools like principal component analysis and factor analysis can incorporate a large set of input variables and establish patterns that would be impenetrable even for a System 2 mindset. This will bring decision making toward the signal variants and thus fortify it.

The final element is to assess the time commitment required to go through all the stages. Given infinite time and resources, there is always a high likelihood of arriving at those signals that are material for sound decision making. Alas, the reality of life does not play well with that assumption! Time and resources are constraints … so one must make do with sub-optimal decision making and establish a cutoff point wherein the benefits outweigh the risks of looking for another alternative. That comes down to the realm of judgement. While George Stigler, a Nobel Laureate in Economics, introduced search optimization in fixed sequential search, a more concrete example has been illustrated in “Algorithms to Live By” by Christian & Griffiths. They suggested a holy grail response: 37% is the answer. In other words, you would reach a good, if not guaranteed optimal, decision by spending the first 37% of your estimated maximum effort purely on exploring, and then committing to the next option that beats everything seen so far. While the estimated maximum effort is quite ambiguous and afflicted with all of the elements of bias (cognitive and statistical), the best thinking is to be as honest as possible in assessing that effort and then draw your search threshold cutoff.
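The 37% rule can be tested with a short simulation of the classic optimal-stopping setup: options arrive one at a time in random order, you look at an initial fraction without committing, then take the first option that beats everything seen so far. This is a sketch; mapping "effort" to a count of candidate options is my simplification, and the function names are hypothetical.

```python
import random


def picks_best(n: int, look_fraction: float, rng: random.Random) -> bool:
    """Run one search over n options; True if the rule selects the best one."""
    scores = [rng.random() for _ in range(n)]
    cutoff = int(n * look_fraction)
    benchmark = max(scores[:cutoff], default=float("-inf"))
    for score in scores[cutoff:]:
        if score > benchmark:
            return score == max(scores)
    # Nothing beat the benchmark, so the best option was passed over.
    return False


def success_rate(look_fraction: float, n: int = 100,
                 trials: int = 20_000, seed: int = 1) -> float:
    """Share of simulated searches in which the rule lands on the best option."""
    rng = random.Random(seed)
    wins = sum(picks_best(n, look_fraction, rng) for _ in range(trials))
    return wins / trials


for fraction in (0.05, 0.37, 0.90):
    print(fraction, round(success_rate(fraction), 2))
```

Stopping the exploration phase too early or too late both lower the odds of landing on the best option; a cutoff near 37% gives roughly a 37% chance of picking the single best one.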

An important element of leadership is about making calls. Good calls, not necessarily the best calls! Calls weighing all possible circumstances that one can, being aware of the biases, bringing in a diverse set of knowledge and opinions, falling back upon agnostic tools in statistics, and knowing when it is appropriate to have learnt enough to pull the trigger. And it is important to cascade the principles of decision making and the underlying complexity into and across the organization.

Chaos and the tide of Entropy!

We have discussed chaos. It is rooted in the fundamental idea that small changes in the initial condition in a system can amplify the impact on the final outcome in the system. Let us now look at another sibling in systems literature – namely, the concept of entropy. We will then attempt to bridge these two concepts since they are inherent in all systems.

Entropy arises from the laws of thermodynamics. Let us state all three laws:

- First law is known as the Law of Conservation of Energy, which states that energy can neither be created nor destroyed: energy can only be transformed from one form to another. Thus, if there is work in terms of energy transformation in a system, there is an equivalent loss of energy around the system. This balance is what the first law of thermodynamics expresses.

- Second law of thermodynamics states that the entropy of an isolated system never decreases; left alone, it tends to increase. If a locker room is not tidied, entropy dictates that it will become messier and more disorderly over time. In other words, all systems that are stagnant will inevitably run up against entropy, which leads to their undoing. Over time the state of disorganization increases. While energy cannot be created or destroyed, as per the First Law, it certainly can change from useful energy to less useful energy.

- Third law establishes that the entropy of a system approaches a constant value as the temperature approaches absolute zero. Thus, the entropy of a pure crystalline substance at absolute zero temperature is zero. However, if there is any imperfection that resides in the crystalline structure, there will be some entropy that will act upon it.

Entropy refers to a measure of disorganization. Thus a crowd that is widely spread out across a large stadium has high entropy, whereas a crowd huddled in one corner of the stadium has low entropy. Entropy is the quantitative measure of the process, namely how much energy has gone from being localized to being diffused in a system. Entropy is enabled by motion or interaction of elements in a system, but is actualized by the process of interaction. All particles work toward spontaneously dissipating their energy if they are not curtailed from doing so. In other words, there is an inherent will, philosophically speaking, of a system to dissipate energy, and that process of dissipation is entropy. However, nothing in this tells us how quickly entropy kicks into gear, and it is this fact that makes it difficult to predict the overall state of the system.
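The stadium picture can be made concrete with a toy calculation. The sketch below borrows Shannon's information entropy as a stand-in for the thermodynamic quantity, which is an assumption on my part, and divides a hypothetical stadium into four equal sections.

```python
import math
from typing import Sequence


def shannon_entropy(counts: Sequence[int]) -> float:
    """Entropy in bits of how a crowd is distributed across sections."""
    total = sum(counts)
    probabilities = [c / total for c in counts if c > 0]
    return sum(p * math.log2(1 / p) for p in probabilities)


spread_out = [250, 250, 250, 250]  # evenly dispersed across four sections
huddled = [1000, 0, 0, 0]          # everyone in one corner

print(shannon_entropy(spread_out))  # prints: 2.0
print(shannon_entropy(huddled))     # prints: 0.0
```

The evenly spread crowd hits the maximum for four sections, while the huddled crowd scores zero: there is only one way to describe where everyone is.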

Chaos, as we have already discussed, makes systems unpredictable because of perturbations in the initial state. Entropy is the dissipation of energy in the system, but there is no standard way of knowing how quickly entropy will set in. There are thus two very interesting elements in systems that work almost simultaneously to make the prediction of systems harder.

Another way of looking at entropy is to view this as a tax that the system charges us when it goes to work on our behalf. If we are purposefully calibrating a system to meet a certain purpose, there is inevitably a corresponding usage of energy or dissipation of energy otherwise known as entropy that is working in parallel. A common example that we are familiar with is mass industrialization initiatives. Mass industrialization has impacts on environment, disease, resource depletion, and a general decay of life in some form. If entropy as we understand it is an irreversible phenomenon, then there is virtually nothing that can be done to eliminate it. It is a permanent tax of varying magnitude in the system.

Since early times, humans have tried to formulate a working framework of the world around them. To do that, they have crafted various models and drawn upon different analogies to lend credence to one way of thinking over another. Either way, they have been left, at best, to wrestle with approximations: approximations in their understanding of the initial conditions, approximations in model mechanics, approximations of the tax that the system inevitably charges, and approximations of the distribution of potential outcomes. Despite valiant efforts to reduce the framework to physical versus behavioral phenomena, the final task of developing a predictable system has not succeeded. While the physical laws of nature describe physical phenomena, the behavioral laws describe non-deterministic phenomena. Linear equations served as the tools for understanding physical laws under the principles of classical Newtonian mechanics, but non-linear observations marred any consistent and comprehensive framework for clear understanding. Entropy reaches out toward an irreversible thermal death: there is an inherent fatalism associated with the Second Law of Thermodynamics. However, if that is presumed to be the case, how is it that human evolution has jumped across multiple chasms and arrived at what it is today? If entropy is indeed the tax, one could argue that chaos, with its bounded but amplified mechanics, has allowed the human race to continue.

Let us now deliberate on this observation of Richard Feynman, a Nobel laureate in physics: “So we now have to talk about what we mean by disorder and what we mean by order. … Suppose we divide the space into little volume elements. If we have black and white molecules, how many ways could we distribute them among the volume elements so that white is on one side and black is on the other? On the other hand, how many ways could we distribute them with no restriction on which goes where? Clearly, there are many more ways to arrange them in the latter case.

We measure “disorder” by the number of ways that the insides can be arranged, so that from the outside it looks the same. The logarithm of that number of ways is the entropy. The number of ways in the separated case is less, so the entropy is less, or the “disorder” is less.” This is commonly described as the distinction between microstates and macrostates. Essentially, it says that there can be innumerable microstates inside the system, although to an outsider looking in there is only one macrostate. The more microstates that are consistent with a given macrostate, the higher the entropy of the system.
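Feynman's counting argument can be sketched in a few lines. The setup is an assumption made for illustration: a box split into left and right halves with `cells_per_half` volume elements each, at most one molecule per element, and equal numbers of indistinguishable white and black molecules. The function names are hypothetical.

```python
from math import comb, log

def ways_separated(cells_per_half, n_each):
    """White molecules confined to the left half, black to the right."""
    return comb(cells_per_half, n_each) * comb(cells_per_half, n_each)

def ways_unrestricted(cells_per_half, n_each):
    """Any molecule may sit in any volume element."""
    total_cells = 2 * cells_per_half
    # Place the white molecules first, then the black ones in what is left.
    return comb(total_cells, n_each) * comb(total_cells - n_each, n_each)

def entropy(ways):
    """Entropy as the logarithm of the number of arrangements."""
    return log(ways)

separated = ways_separated(10, 5)   # 63504 arrangements
mixed = ways_unrestricted(10, 5)    # 46558512 arrangements
print(entropy(separated) < entropy(mixed))  # True: the separated case has less entropy
```

Even in this tiny box, the unrestricted case admits several hundred times more arrangements than the separated one, and the gap widens rapidly as the box grows.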

We ran across a wonderful example that makes the same point in a different way. A professor distributes chocolates to the students in his class. He has 35 students but only 25 chocolates. He throws the chocolates to the students, so some students end up with more than others. The students do not know that the professor had only 25 chocolates; they presume that there were 35. The end result is that the students are disconcerted because they perceive that others have received more than their share, yet the system as a whole still holds exactly 25 chocolates. Regardless of how the 25 chocolates are configured among the students, the macrostate is stable.
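The chocolate example can be sketched as a count of configurations. Treating each distinct allocation of chocolates to students as one microstate is an assumption of this sketch, as are the function names and the random seeds. The count uses the standard stars-and-bars formula for splitting identical items among distinct recipients.

```python
from math import comb
import random

def count_distributions(chocolates, students):
    """Number of ways to split identical chocolates among distinct students
    (the stars-and-bars formula)."""
    return comb(chocolates + students - 1, students - 1)

def throw_chocolates(chocolates, students, seed):
    """One random microstate: each chocolate lands on a random student."""
    rng = random.Random(seed)
    holdings = [0] * students
    for _ in range(chocolates):
        holdings[rng.randrange(students)] += 1
    return holdings

print(count_distributions(25, 35))  # a very large number of possible microstates

# Different throws give different microstates, but the total never changes.
for seed in range(3):
    holdings = throw_chocolates(25, 35, seed)
    print(max(holdings), sum(holdings))  # the sum is always 25
```

However unevenly a particular throw lands, the total observed from outside the classroom stays at 25.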

So what do Feynman and the chocolate example suggest for our purpose of understanding the impact of entropy on systems? Our understanding is that the potential permutations of elements in the system, which make up the various microstates, hint at higher entropy but in reality have no impact on the macrostate per se, except that the macrostate has inherently higher entropy. Does this mean that the macrostate has a shorter life span? Does this mean that the macrostate is inherently more unstable? Could this mean an exponential decay factor in that state? The answer to all of these questions is: not always. Entropy is a physical phenomenon, but to abstract it out to study organic systems that represent super complex macrostates, and to arrive at some predictable pattern of decay, is a bridge too far! If we were to strictly follow the precepts of the Second Law and just for a moment forget about chaos, one could surmise that evolution is not a measure of progress; it is simply a reconfiguration.

Theodosius Dobzhansky, a well-known geneticist and evolutionary biologist, says: “Seen in retrospect, evolution as a whole doubtless had a general direction, from simple to complex, from dependence on to relative independence of the environment, to greater and greater autonomy of individuals, greater and greater development of sense organs and nervous systems conveying and processing information about the state of the organism’s surroundings, and finally greater and greater consciousness. You can call this direction progress or by some other name.”

Harold Morowitz says: “Life is organization. From prokaryotic cells, eukaryotic cells, tissues and organs, to plants and animals, families, communities, ecosystems, and living planets, life is organization, at every scale. The evolution of life is the increase of biological organization, if it is anything. Clearly, if life originates and makes evolutionary progress without organizing input somehow supplied, then something has organized itself. Logical entropy in a closed system has decreased. This is the violation that people are getting at, when they say that life violates the second law of thermodynamics. This violation, the decrease of logical entropy in a closed system, must happen continually in the Darwinian account of evolutionary progress.”

Entropy occurs in all systems. That is an indisputable fact. However, once we start defining boundaries, we tend to see that bounded systems decay faster. If we instead open the system up and leave it unbounded, many other forces come into play that are tantamount to some net progress. While it might be true that energy balances out, what we miss as social scientists, model builders, or avid students of systems are the indices that reflect leaps in quality and resilience, and a host of other factors that stabilize the system despite the constant and ominous presence of entropy’s inner workings.