
The CFO as Chief Option Architect: Embracing Uncertainty

Part I: Embracing the Options Mindset

This first half explores the philosophical and practical foundation of real options thinking, scenario-based planning, and the CFO’s evolving role in navigating complexity. The voice is grounded in experience, built on systems thinking, and infused with a deep respect for the unpredictability of business life.

I learned early that finance, for all its formulas and rigor, rarely rewards control. In one of my earliest roles, I designed a seemingly watertight budget, complete with perfectly reconciled assumptions and cash flow projections. The spreadsheet sang. The market didn’t. A key customer delayed a renewal. A regulatory shift in a foreign jurisdiction quietly unraveled a tax credit. In just six weeks, our pristine model looked obsolete. I still remember staring at the same Excel sheet and realizing that the budget was not a map, but a photograph, already out of date. That moment shaped much of how I came to see my role as a CFO. Not as controller-in-chief, but as architect of adaptive choices.

The world has only become more uncertain since. Revenue operations now sit squarely in the storm path of volatility. Between shifting buying cycles, hybrid GTM models, and global macro noise, what used to be predictable has become probabilistic. Forecasting a quarter now feels less like plotting points on a trendline and more like tracing potential paths through fog. It is in this context that I began adopting, and later championing, the role of the CFO as “Chief Option Architect.” Because when prediction fails, design must take over.

This mindset draws deeply from systems thinking. In complex systems, what matters is not control, but structure. A system that adapts will outperform one that resists. And the best way to structure flexibility, I have found, is through the lens of real options. Borrowed from financial theory, real options describe the value of maintaining flexibility under uncertainty. Instead of forcing an all-in decision today, you make a series of smaller decisions, each one preserving the right, but not the obligation, to act in a future state. This concept, though rooted in asset pricing, holds powerful relevance for how we run companies.

When I began modeling capital deployment for new GTM motions, I stopped thinking in terms of “budget now, or not at all.” Instead, I started building scenario trees. Each branch represented a choice: deploy full headcount at launch, or split into a two-phase pilot with a learning checkpoint. Invest in a new product SKU with full marketing spend, or wait for usage signals to cross a threshold before escalating. These decision trees captured something that most budgets never do: the reality of the paths not taken, the contingencies we rarely discuss. Most importantly, they made us better at allocating not just capital, but attention. I am sharing my Bible on this topic, which Dr. Alexander Cassuto recommended to me in the Econometrics course at Cal State Hayward. It was far more pleasant and easier to read than Jiang’s book on Econometrics.
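
To show how such a tree rolls back, here is a minimal Python sketch. The `Node` structure, the `value` function, and every probability and dollar figure are hypothetical assumptions for illustration, not numbers from our models. Chance nodes take a probability-weighted average, and decision nodes take the best branch.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Node:
    """One node in a small decision tree (a hypothetical structure for illustration)."""
    name: str
    kind: str                     # "decision", "chance", or "terminal"
    payoff: float = 0.0           # net payoff in $M, used only by terminal nodes
    children: List[Tuple[Optional[float], "Node"]] = field(default_factory=list)


def value(node: Node) -> float:
    """Roll the tree back: probability-weighted at chance nodes, best branch at decisions."""
    if node.kind == "terminal":
        return node.payoff
    if node.kind == "chance":
        return sum(p * value(child) for p, child in node.children)
    return max(value(child) for _, child in node.children)


def leaf(name: str, payoff: float) -> Node:
    return Node(name, "terminal", payoff)


# Hypothetical numbers in $M: demand turns out strong with probability 0.4.
# Full launch spends 3.0 up front; the pilot spends 0.5, then 2.5 more only if we expand.
full_launch = Node("full headcount at launch", "chance", children=[
    (0.4, leaf("strong demand", 10.0 - 3.0)),
    (0.6, leaf("weak demand", 1.0 - 3.0)),
])

phased_pilot = Node("two-phase pilot", "chance", children=[
    (0.4, Node("checkpoint: strong signal", "decision", children=[
        (None, leaf("expand", 9.0 - 0.5 - 2.5)),   # a later start costs some upside
        (None, leaf("stop", -0.5)),
    ])),
    (0.6, Node("checkpoint: weak signal", "decision", children=[
        (None, leaf("expand", 0.8 - 0.5 - 2.5)),
        (None, leaf("stop", -0.5)),
    ])),
])

launch = Node("GTM launch choice", "decision",
              children=[(None, full_launch), (None, phased_pilot)])

for option in (full_launch, phased_pilot):
    print(f"{option.name}: {value(option):.2f}")
```

With these assumed numbers, the phased pilot is worth about 0.5 more than the full launch. That gap is the value of the learning checkpoint, which is the option to stop after a weak signal.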

This change in framing altered my approach to every part of revenue operations. Take, for instance, the deal desk. In traditional settings, the deal desk is a compliance checkpoint where pricing, terms, and margin constraints are reviewed. But when viewed through an options lens, it becomes a staging ground for strategic bets. A deeply discounted deal might seem reckless on paper, but if structured with expansion clauses, usage gates, or future upsell options, it can behave like a call option on account growth. The key is to recognize and price the option value. Once I began modeling deals this way, I found we were saying “yes” more often, and with far better clarity on risk.
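
One simple way to put a number on that option value is a scenario-weighted approximation of a call payoff: the discounted, probability-weighted value of max(expansion value minus cost to deliver, 0). This is a deliberately simplified stand-in, not a Black-Scholes or binomial valuation. The function name, scenarios, discount rate, and upfront discount below are all hypothetical.

```python
def expansion_option_value(scenarios, exercise_cost, annual_rate, years):
    """Discounted, probability-weighted payoff of a call-like expansion right.

    scenarios: list of (probability, expansion_value) pairs (hypothetical inputs).
    exercise_cost: what it costs us to deliver the expansion (the "strike").
    """
    total_p = sum(p for p, _ in scenarios)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("scenario probabilities must sum to 1")
    # The right is only exercised when it pays, hence max(..., 0).
    expected_payoff = sum(p * max(v - exercise_cost, 0.0) for p, v in scenarios)
    return expected_payoff / (1 + annual_rate) ** years


# Hypothetical: expansion worth $900k, $400k, or $0 of gross margin in two years,
# with $250k of delivery cost to exercise it.
scenarios = [(0.3, 900_000), (0.4, 400_000), (0.3, 0)]
option_value = expansion_option_value(scenarios, exercise_cost=250_000,
                                      annual_rate=0.10, years=2)
upfront_discount = 120_000  # margin given up on the land deal (hypothetical)
print(round(option_value), option_value > upfront_discount)
```

If the option value exceeds the margin given up on the land deal, the discount is defensible as a purchased option rather than a giveaway.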

Data analytics became essential here, not for forecasting the exact outcome but for simulating plausible ones. I leaned heavily on regression modeling, time-series decomposition, and agent-based simulation. We used R to create time-based churn scenarios across customer cohorts. We used Arena to simulate resource allocation under delayed expansion assumptions. These were not predictions. They were controlled chaos exercises, designed to show what could happen, not what would. But the power of this lay not just in the results but in the mindset it built. We stopped asking, “What will happen?” and started asking, “What could we do if it does?”
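
Our churn scenarios were built in R. As a rough Python analogue, the sketch below runs a Monte Carlo simulation over customer cohorts, assuming each customer churns independently at a fixed monthly rate per scenario. The cohort sizes, churn rates, run counts, and function names are hypothetical.

```python
import random
import statistics


def simulate_cohort(start_customers, monthly_churn, months, rng):
    """One simulated path: each active customer churns independently each month."""
    active = start_customers
    for _ in range(months):
        churned = sum(1 for _ in range(active) if rng.random() < monthly_churn)
        active -= churned
    return active


def churn_scenarios(cohorts, scenarios, months=12, runs=200, seed=7):
    """Median surviving customers for every (cohort, scenario) pair.

    cohorts: {cohort name: starting customers}; scenarios: {name: monthly churn rate}.
    """
    rng = random.Random(seed)
    results = {}
    for cohort, start in cohorts.items():
        for scenario, rate in scenarios.items():
            paths = [simulate_cohort(start, rate, months, rng) for _ in range(runs)]
            results[(cohort, scenario)] = statistics.median(paths)
    return results


# Hypothetical cohorts and monthly churn assumptions.
cohorts = {"2023-H1": 200, "2023-H2": 150}
scenarios = {"base": 0.01, "stress": 0.03}
for (cohort, scenario), survivors in churn_scenarios(cohorts, scenarios).items():
    print(f"{cohort} / {scenario}: median {survivors} customers after 12 months")
```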

From these simulations, we developed internal thresholds to trigger further investment. For example, if three out of five expansion triggers fired (such as a usage spike, an NPS improvement, or adoption by an additional department), we would greenlight phase two of GTM spend. That logic replaced endless debate with a predefined structure. It also gave our board more confidence. Rather than asking them to bless a single future, we offered a roadmap of choices, each with its own decision gates. They didn’t need to believe our base case. They only needed to believe we had options.
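
A k-of-n trigger gate like this is easy to encode. In the minimal sketch below, the first three triggers mirror the examples above. Their thresholds, and the last two triggers, are hypothetical placeholders.

```python
# Five expansion triggers. The first three follow the examples in the text;
# their thresholds, and the last two triggers, are hypothetical placeholders.
TRIGGERS = {
    "usage_spike": lambda s: s["usage_growth_qoq"] >= 0.25,
    "nps_improvement": lambda s: s["nps_delta"] >= 5,
    "new_department": lambda s: s["departments_active"] > s["departments_at_land"],
    "exec_sponsor_engaged": lambda s: s["exec_sponsor_meetings"] >= 2,
    "tickets_declining": lambda s: s["ticket_trend"] < 0,
}


def fired_triggers(signals):
    """Names of the triggers whose conditions hold for this account's signals."""
    return [name for name, check in TRIGGERS.items() if check(signals)]


def greenlight_phase_two(signals, required=3):
    """Greenlight phase-two GTM spend once at least `required` triggers have fired."""
    return len(fired_triggers(signals)) >= required


account = {"usage_growth_qoq": 0.31, "nps_delta": 7, "departments_active": 2,
           "departments_at_land": 2, "exec_sponsor_meetings": 1, "ticket_trend": -0.1}
print(fired_triggers(account), greenlight_phase_two(account))
```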

Yet, as elegant as these models were, the most difficult challenge remained human. People, understandably, want certainty. They want confidence in forecasts, commitment to plans, and clarity in messaging. I had to coach my team and myself to get comfortable with the discomfort of ambiguity. I invoked the concept of bounded rationality from decision science: we make the best decisions we can with the information available to us, within the time allotted. There is no perfect foresight. There is only better framing.

This is where the law of unintended consequences makes its entrance. In traditional finance functions, overplanning often leads to rigidity. You commit to hiring plans that no longer make sense three months in. You promise CAC thresholds that collapse under macro pressure. You bake linearity into a market that moves in waves. When this happens, companies double down, pushing harder against the wrong wall. But when you think in options, you pull back when the signal tells you to. You course-correct. You adapt. And paradoxically, you appear more stable.

As we embedded this thinking deeper into our revenue operations, we also became more cross-functional. Sales began to understand the value of deferring certain go-to-market investments until usage signals validated demand. Product began to view feature development as portfolio choices: some high-risk, high-return, others safer but with less upside. Customer Success began surfacing renewal and expansion probabilities not as binary yes/no forecasts, but as weighted signals on a decision curve. The shared vocabulary of real options gave us a language for navigating ambiguity together.

We also brought this into our capital allocation rhythm. Instead of annual budget cycles, we moved to rolling forecasts with embedded thresholds. If churn stayed below 8% and expansion held steady, we would greenlight an additional five SDRs. If product-led growth signals in EMEA hit critical mass, we’d fund a localized support pod. These weren’t whims. They were contingent commitments, bound by logic, not inertia. And that changed everything.
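
Contingent commitments like these can be expressed as a small rule table that is re-evaluated at each rolling-forecast refresh. This sketch rests on stated assumptions. The metric names are hypothetical, “expansion held steady” is interpreted as expansion within ±5% of the prior period, and “critical mass” is represented by a hypothetical 500 weekly active accounts.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ContingentCommitment:
    description: str
    condition: Callable[[Dict[str, float]], bool]


COMMITMENTS: List[ContingentCommitment] = [
    ContingentCommitment(
        "Add five SDRs",
        # Assumption: "expansion held steady" = expansion within ±5% of the prior period.
        lambda m: m["churn_rate"] < 0.08 and abs(m["expansion_change"]) <= 0.05,
    ),
    ContingentCommitment(
        "Fund a localized EMEA support pod",
        # Hypothetical stand-in for "critical mass" in EMEA product-led growth.
        lambda m: m["emea_plg_weekly_active_accounts"] >= 500,
    ),
]


def commitments_to_release(metrics: Dict[str, float]) -> List[str]:
    """Return every commitment whose condition holds at this forecast refresh."""
    return [c.description for c in COMMITMENTS if c.condition(metrics)]


# One hypothetical rolling-forecast refresh.
this_month = {"churn_rate": 0.065, "expansion_change": -0.02,
              "emea_plg_weekly_active_accounts": 430}
print(commitments_to_release(this_month))
```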

The results were not perfect. We made wrong bets. Some options expired worthless. Others took longer to mature than we expected. But overall, we made faster decisions with greater alignment. We used our capital more efficiently. And most of all, we built a culture that didn’t flinch at uncertainty but designed for it.

In the next part of this essay, I will go deeper into the mechanics of implementing this philosophy across the deal desk, QTC architecture, and pipeline forecasting. I will also show how to build dashboards that visualize decision trees and option paths, and how to teach your teams to reason probabilistically without losing speed. Because in a world where volatility is the only certainty, the CFO’s most enduring edge is not control but optionality, structured by design and deployed with discipline.

Part II: Implementing Option Architecture Inside RevOps

A CFO cannot simply preach agility from a whiteboard. To embed optionality into the operational fabric of a company, the theory must show up in tools, in dashboards, in planning cadences, and in the daily decisions made by deal desks, revenue teams, and systems owners. I have found that fundamental transformation comes not from frameworks but from friction: the friction of trying to make the idea work across functions, under pressure, and at scale. That’s where option thinking proves its worth.

We began by reimagining the deal desk, not as a compliance stop but as a structured betting table. In conventional models, deal desks enforce pricing integrity, review payment terms, and ensure T’s and C’s fall within approved tolerances. That’s necessary, but not sufficient. In uncertain environments, where customer buying behavior, competitive pressure, or adoption curves wobble without warning, rigid deal policies become brittle. The opportunity lies in recasting the deal desk as a decision node within a larger options tree.

Consider a SaaS enterprise deal with land-and-expand potential. A rigid model either forces full commitment upfront or defers expansion to a vague “later.” But if we treat the deal like a compound call option, the logic becomes clearer. You price the initial land deal aggressively, with usage-based triggers that, when met, unlock favorable expansion terms. You embed a re-pricing clause that applies if usage crosses a defined threshold within 90 days. You insert a “soft commit” expansion clause tied to the active user count. None of these is just a term; each is an embedded real option. And when structured well, they deliver upside without requiring the customer to commit to uncertain future needs.
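
The compound structure can be valued by rolling back two stages. First value the expansion right at the second stage, then the decision at the 90-day checkpoint to pay for pursuing it. The sketch below is a simplified, undiscounted illustration. Every parameter name and number is a hypothetical assumption, expressed in thousands of dollars of margin.

```python
def expansion_value(p_expand, expansion_margin, delivery_cost):
    """Inner option: expansion is exercised only if its margin exceeds the cost to deliver it."""
    return p_expand * max(expansion_margin - delivery_cost, 0.0)


def compound_deal_value(land_margin, p_usage_trigger, repricing_uplift, pursue_cost,
                        p_expand, expansion_margin, delivery_cost):
    """Roll back a two-stage land-and-expand deal (all inputs hypothetical, undiscounted).

    Stage 1 (90-day checkpoint): if usage crosses the threshold, the re-pricing clause adds
    `repricing_uplift`, and we may pay `pursue_cost` to work the expansion.
    Stage 2: the expansion right itself, valued by `expansion_value`.
    """
    inner = expansion_value(p_expand, expansion_margin, delivery_cost)
    # The outer option: pursue expansion only if the inner option is worth its cost.
    triggered_branch = repricing_uplift + max(inner - pursue_cost, 0.0)
    return land_margin + p_usage_trigger * triggered_branch


# Hypothetical deal, in $k of margin.
inputs = dict(land_margin=50, p_usage_trigger=0.5, repricing_uplift=40, pursue_cost=20,
              p_expand=0.6, expansion_margin=300, delivery_cost=100)
print(compound_deal_value(**inputs))
print(compound_deal_value(**{**inputs, "pursue_cost": 150}))
```

With these inputs the deal is worth 120. If the pursuit cost rises to 150, the checkpoint declines to pursue expansion and the value drops to 70. That ability to decline is the point of holding an option rather than an obligation.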

In practice, this approach meant reworking CPQ systems, retraining legal, and coaching reps to frame options credibly. We designed templates with optionality clauses already coded into Salesforce workflows. Once an account crossed a predefined trigger (say, 80% license utilization), the next best action flowed to the account executive and customer success manager. The logic wasn’t linear. It was branching. We visualized deal paths much as one would map a decision tree in a risk-adjusted capital model.
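
The trigger logic can be sketched outside any CRM as upward-crossing detection on utilization history. This is plain Python, not Salesforce’s API. The `Account` fields, recipients, and action text are hypothetical. The rule fires only on the reading where utilization moves from below 80% to at or above it, so a steady 85% does not fire again at every reading.

```python
from dataclasses import dataclass
from typing import List, Tuple

UTILIZATION_TRIGGER = 0.80  # the example threshold from the text


@dataclass
class Account:
    name: str
    licenses: int
    owner_ae: str
    owner_csm: str


def crossed_up(previous: float, current: float,
               threshold: float = UTILIZATION_TRIGGER) -> bool:
    """True only when utilization moves from below the threshold to at or above it."""
    return previous < threshold <= current


def next_best_actions(account: Account, seats_used: List[int]) -> List[Tuple[str, str]]:
    """(recipient, action) pairs for every upward crossing in a seat-usage history."""
    ratios = [used / account.licenses for used in seats_used]
    actions = []
    for prev, curr in zip(ratios, ratios[1:]):
        if crossed_up(prev, curr):
            actions.append((account.owner_ae, f"Offer expansion terms to {account.name}"))
            actions.append((account.owner_csm, f"Schedule adoption review with {account.name}"))
    return actions


acct = Account("Acme Corp", licenses=100, owner_ae="ae@example.com", owner_csm="csm@example.com")
for recipient, action in next_best_actions(acct, [62, 74, 81, 85, 79, 83]):
    print(recipient, "->", action)
```

A dip below 80% followed by a recovery fires the trigger again, as in the example history. A production rule might add a cooldown period.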

Yet even the most elegant structure can fail if the operating rhythm stays linear. That is why we transitioned away from rigid quarterly forecasts toward rolling scenario-based planning. Forecasting ceased to be a spreadsheet contest. Instead, we evaluated forecast bands, not point estimates. If base churn exceeded X% in a specific cohort, how did that impact our expansion coverage ratio? If deal velocity in EMEA slowed by two weeks, how would that compress the bookings-to-billings gap? We visualized these as cascading outcomes, not just isolated misses.
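
Forecast bands can come from simulating the pipeline rather than summing weighted values. The sketch below makes three assumptions: deal outcomes are independent, a fixed number of weeks remains in the quarter, and a velocity slowdown is modeled as a uniform slip in close dates. The deal list and parameter names are hypothetical. The function reports P10, P50, and P90 bookings.

```python
import random
import statistics


def simulate_bookings(deals, weeks_left=8, slip_weeks=0, runs=5000, seed=11):
    """Return (P10, P50, P90) of simulated in-quarter bookings.

    deals: list of (amount, win_probability, weeks_until_close) tuples.
    slip_weeks: uniform delay added to every close date to model slower deal velocity.
    Deals are assumed to win or lose independently of one another.
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(runs):
        total = 0.0
        for amount, p_win, weeks in deals:
            # A deal counts only if it still closes inside the quarter and is won.
            if weeks + slip_weeks <= weeks_left and rng.random() < p_win:
                total += amount
        totals.append(total)
    deciles = statistics.quantiles(totals, n=10)  # nine cut points: P10 ... P90
    return deciles[0], statistics.median(totals), deciles[-1]


# Hypothetical pipeline: (amount, win probability, weeks until expected close).
deals = [
    (120_000, 0.6, 3),
    (80_000, 0.4, 6),
    (200_000, 0.3, 7),
    (50_000, 0.8, 2),
    (150_000, 0.5, 5),
]

for slip in (0, 2):
    p10, p50, p90 = simulate_bookings(deals, slip_weeks=slip)
    print(f"slip {slip} wk: P10 {p10:,.0f}  P50 {p50:,.0f}  P90 {p90:,.0f}")
```

Comparing the two runs shows how a two-week slowdown reshapes the whole band, not only the midpoint.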

To build this capability, we used what I came to call “option dashboards.” These were layered, interactive models with inputs tied to the live pipeline and post-sale telemetry. Each card on the dashboard represented a decision node: an inflection point. Would we deploy more headcount into SMB if the average CAC-to-LTV ratio fell below 1:3 (that is, LTV-to-CAC above 3:1)? Would we pause a feature rollout in one region to redirect support toward a segment with stronger usage signals? Each choice was pre-wired with boundary logic. The decisions didn’t live in a drawer; they lived in motion.

Building these dashboards required investment. But more than tools, it required permission. Teams needed to know they could act on signals, not wait for executive validation every time a deviation emerged. We institutionalized the language of “early signal actionability.” If revenue leaders spotted a decline in renewal health across a cluster of customers tied to the same integration module, they didn’t wait for a churn event. They pulled forward roadmap fixes. That wasn’t just good customer service; it was real options in flight.

This also brought a new flavor to our capital allocation rhythm. Rather than annual planning cycles that locked resources into static swim lanes, we adopted gated resourcing tied to defined thresholds. Our FP&A team built simulation models in Python and R that estimated the expected value of a resourcing move from scenario weightings. For example, if a new vertical showed a 60% likelihood of crossing a 10-deal threshold by mid-Q3, we pre-approved GTM spend that would activate only once that signal was hit. This looked cautious to some. In reality, it was aggressive, in the right direction and at the right moment.
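
Here is a minimal sketch of that gate, assuming the vertical’s deal count by mid-Q3 is Poisson-distributed with a mean taken from the current pipeline. The Poisson assumption, the payoff values, and the function names are hypothetical. Only the 60% likelihood and the 10-deal threshold come from the example above.

```python
import math


def prob_at_least(k, expected_deals):
    """P(N >= k) for a Poisson-distributed deal count N with the given mean."""
    below_k = sum(
        math.exp(-expected_deals) * expected_deals ** i / math.factorial(i)
        for i in range(k)
    )
    return 1.0 - below_k


def gated_spend(expected_deals, threshold_deals=10, required_likelihood=0.60,
                value_if_crossed=2.0, value_if_missed=-0.5):
    """Return (likelihood, expected value in $M, pre_approve) for a contingent GTM spend.

    value_if_crossed / value_if_missed are hypothetical payoffs of activating the spend.
    """
    p = prob_at_least(threshold_deals, expected_deals)
    expected_value = p * value_if_crossed + (1 - p) * value_if_missed
    return p, expected_value, p >= required_likelihood


for mean in (8.0, 11.0):
    p, ev, approve = gated_spend(mean)
    print(f"expected deals {mean}: P(>=10) {p:.2f}, EV {ev:.2f}, pre-approve {approve}")
```

With 11 expected deals, the chance of reaching 10 is roughly two in three, so the spend is pre-approved. With 8 expected deals, it is not. Pre-approval only arms the spend, which still activates when the deals actually land.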

Throughout all of this, I kept returning to a central truth: uncertainty punishes rigidity but rewards those who respect its contours. A pricing policy that cannot flex will leave margin on the table or kill deals in flight. A hiring plan that commits too early will choke working capital. And a CFO who waits for clarity before making bets will arrive too late. In decision theory, we often talk about “the cost of delay” versus “the cost of error.” A good options model minimizes both, not by being right, but by being ready.

Of course, optionality without discipline can devolve into indecision, so we embedded guardrails. We defined thresholds that made decision inertia unacceptable. If a cohort’s NRR dropped for three consecutive months and win-back campaigns failed, we sunsetted that motion. If a beta feature failed to reach its target usage velocity within a quarter, we reallocated the development budget. These were not emotional decisions; they were the logical conclusions of failed options. And we celebrated them. A failed option, tested and closed, beats a zombie investment every time.
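
The NRR guardrail reduces to a run-length check. This sketch interprets “dropped for three consecutive months” as three straight month-over-month declines at the end of the series. That reading, the function names, and the sample data are assumptions.

```python
from typing import List


def trailing_declines(series: List[float]) -> int:
    """Length of the unbroken run of month-over-month declines ending at the latest month."""
    run = 0
    for prev, curr in zip(series, series[1:]):
        run = run + 1 if curr < prev else 0
    return run


def should_sunset(nrr_by_month: List[float], winback_succeeded: bool,
                  months: int = 3) -> bool:
    """Guardrail: sunset the motion after `months` straight NRR declines and a failed win-back."""
    return trailing_declines(nrr_by_month) >= months and not winback_succeeded


# Hypothetical monthly NRR for one cohort.
falling = [1.04, 1.02, 0.99, 0.97, 0.95]
wobbling = [1.04, 1.02, 1.03, 1.01, 1.00]
print(should_sunset(falling, winback_succeeded=False))   # four straight declines
print(should_sunset(wobbling, winback_succeeded=False))  # run broken by a rebound
```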

We also revised our communication with the board. Instead of defending fixed forecasts, we presented probability-weighted trees. “If churn holds, and expansion triggers fire, we’ll beat target by X.” “If macro shifts pull SMB renewals down by 5%, we stay within plan by flexing mid-market initiatives.” This shifted the conversation from finger-pointing to scenario readiness. Investors liked it. More importantly, so did the executive team. We could disagree on base assumptions but still align on decisions because we’d mapped the branches ahead of time.

One area where this thinking made an outsized impact was compensation planning. Sales comp is notoriously fragile under volatility. We redesigned quota targets and commission accelerators using scenario bands, not fixed assumptions. We tested payout curves under best, base, and downside cases. We then ran Monte Carlo simulations to see how frequently actuals would fall into the “too much upside” or “demotivating downside” zones. This led to more durable comp plans, which meant fewer panicked mid-year resets. Our reps trusted the system, and our finance team could model cost predictability with far greater confidence.
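
A Monte Carlo check of a payout curve might look like the sketch below. The following are all hypothetical assumptions, not the plan we actually ran:

- the curve shape (no payout below 50% attainment, linear to 100%, a 1.5× accelerator above);
- the zone cutoffs (attainment under 70%, or payout above twice target incentive);
- the normally distributed attainment bands.

```python
import random


def payout(attainment, target_incentive=100_000, accelerator=1.5, payout_floor=0.5):
    """Hypothetical curve: zero below the floor, linear to 100%, accelerated above quota."""
    if attainment < payout_floor:
        return 0.0
    if attainment <= 1.0:
        return target_incentive * attainment
    return target_incentive * (1.0 + accelerator * (attainment - 1.0))


def zone_rates(mean_attainment, sd, runs=20_000, seed=3,
               downside_cutoff=0.7, upside_multiple=2.0, target_incentive=100_000):
    """Share of simulated outcomes in the 'demotivating downside' and 'too much upside' zones."""
    rng = random.Random(seed)
    downside = upside = 0
    for _ in range(runs):
        attainment = max(0.0, rng.gauss(mean_attainment, sd))  # attainment can't go negative
        if attainment < downside_cutoff:
            downside += 1
        if payout(attainment, target_incentive) > upside_multiple * target_incentive:
            upside += 1
    return downside / runs, upside / runs


# Hypothetical attainment bands: (mean, standard deviation).
bands = {"downside": (0.80, 0.20), "base": (1.00, 0.20), "best": (1.15, 0.25)}
for band, (mu, sd) in bands.items():
    low, high = zone_rates(mu, sd)
    print(f"{band}: {low:.1%} demotivating downside, {high:.1%} too much upside")
```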

In retrospect, all of this loops back to a single mindset shift: you don’t plan to be right. You plan to stay in the game. And staying in the game requires options that are well designed, embedded into the process, and respected by every function. Sales needs to know they can escalate an expansion offer once particular customer signals fire. Success needs to know they have the budget authority to engage support when early churn flags arise. Product needs to know they can pause a roadmap stream if NPV no longer justifies it. And finance needs to know that its most significant power is not in control, but in preparation.

Today, when I walk into a revenue operations review or a strategic planning offsite, I do not bring a budget with fixed forecasts. I bring a map. It has branches. It has signals. It has gates. And it has options, each one designed not to predict the future, but to help us meet it with composure and move quickly when the fog clears.

Because in the world I have operated in since 1994, spanning economic cycles, geopolitical events, sudden buyer hesitation, system failures, and moments of exponential product success, one principle has held. The companies that win are not the ones that guess right. They are the ones that remain ready. And readiness, I have learned, is the true hallmark of a great CFO.

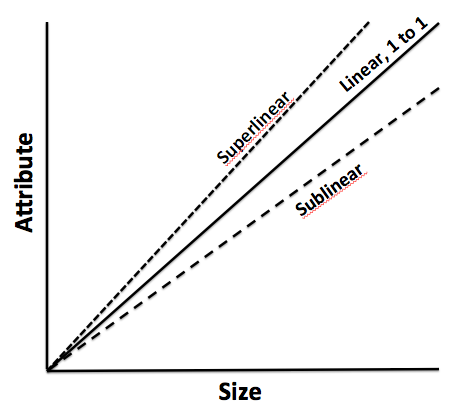

Internal versus External Scale

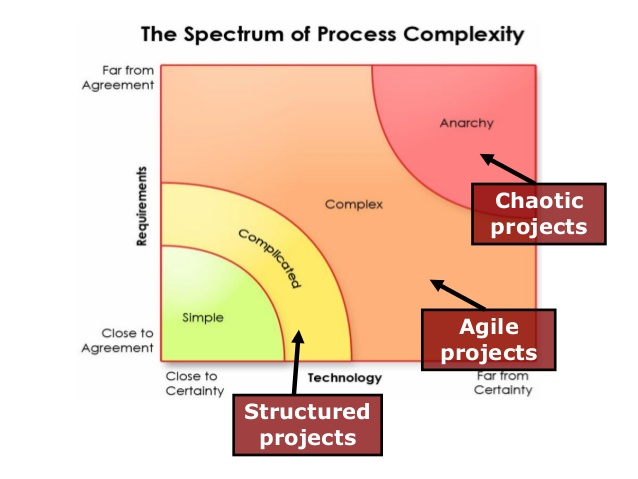

This article discusses internal and external complexity before we tee up a more detailed discussion of internal versus external scale. It acknowledges that complex adaptive systems have inherent internal and external complexities that are not additive; their combined impact is exponential. Hence, we have to sift through our understanding and perhaps even review the salient aspects of complexity science, which were covered in more detail in an earlier chapter. Revisiting complexity science is nonetheless important, and we will return to it often across other blog posts to drive home its fundamental concepts and their practical implications for management and for solving challenges at a business or even a broader social scale.

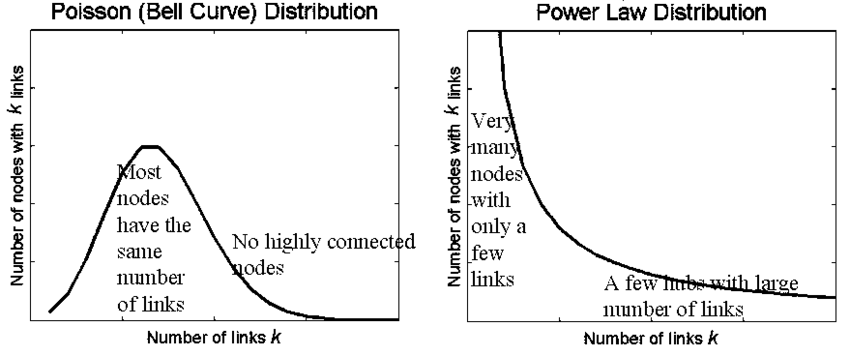

A complex system is part of a larger environment, and it is safe to say that the larger environment is more complex than the system itself. But for the complex system to work, it must depend on a certain level of predictability and regularity, both between its initial state and the events associated with it and in the interaction of the variables within the system itself. Note that I am covering both complex physical systems and complex adaptive systems in this discussion. A system within an environment has an important attribute: it serves as a receptor for signals from the external variables of the environment that impact it. The system will either process a signal or discard it, largely based on what the system is trying to achieve. We will dedicate an entire article to systems engineering and systems thinking later, but the overarching point is that a system exists to serve a definite purpose. All systems depend on resources and exhibit a certain capacity to process information. Hence, a system will try to extract as many regularities as possible so that it can operate predictably and efficiently in fulfilling its higher-level purpose.

Let us understand external complexity, which we can also call environmental complexity. External complexity represents physical, cultural, social, and technological elements that are intertwined. These environments, each beleaguered with its own grades of complexity, act as a mold that shapes the operating systems within them, which are mere artifacts. If an operating system fits well within the mold, then a measure of fitness or harmony arises between internal and external complexity. This is the root of dynamic adaptation. When external environments are very complex, many variables are at play, and an internal system has to process more information in order to survive. So how the internal system reacts to external systems is important, and the key bridge between the two is learning. Does the system learn and improve outcomes through continuous learning, and does it continually modify its existing form and functional objectives as it learns from external complexity? How is the feedback loop monitored and managed when one deals with internal and external complexities?

The environment generates random problems and challenges, and the internal system has to accept or discard them and then establish a process to distribute the accepted problems among its agents so they can be solved efficiently. There is always a mechanism at work that tries to align internal complexity with external complexity, since it is widely believed that the ability to align the two efficiently is the key to maintaining a competitive edge or to making deliberate progress on a set of important challenges.

Internal complexity comprises the sub-elements that interact within, and make up, a system residing within the larger context of an external complex system or environment. Internal complexity arises from the number of variables in the system, the hierarchical complexity of those variables, the system’s capacity to pass information between levels and variables, and, finally, how it learns from the external environment. There are five dimensions of complexity: interdependence, diversity of system elements, unpredictability and ambiguity, the rate of dynamic mobility and adaptability, and the capability of the agents to process information along with their individual channel capacities.

If we are discussing scale management, we need to ask some fundamental questions. What is scale in the context of complex systems? Why do we manage for scale? How does managing for scale advance us toward a meaningful outcome? How does scale play out in internal and external complex systems? What do we expect to see if we have managed for scale well? And what does the future hold for us if we assume that we have optimized for scale and that scale is the key objective function we have to pursue?